Research/Study

Florida Gov.’s Press-Sec seems inspired by anti-LGBTQ Twitter account

Christina Pushaw, who used “grooming” smears to justify Florida’s “Don’t Say Gay” law, said Libs of TikTok “truly opened her eyes”

By Kayla Gogarty | WASHINGTON – After Florida Republican Gov. Ron DeSantis’ press secretary Christina Pushaw used an anti-LGBTQ slander to defend Florida’s “Don’t Say Gay” bill on March 4, right-wing media and figures used similar absurd attacks to defend the legislation, accusing LGBTQ people of “grooming” children to be LGBTQ or to engage in sexual activity.

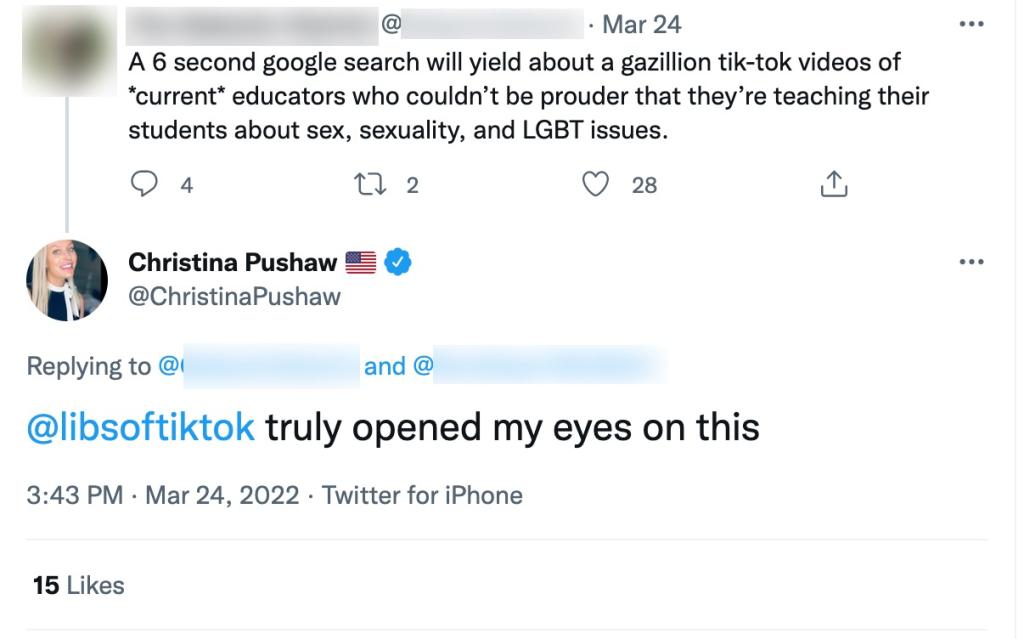

Recently, Pushaw credited anti-LGBTQ Twitter account “Libs of TikTok” with opening her eyes to the issue, and now Media Matters has found that Pushaw and the account have interacted with each other at least 138 times since June 2021.

On March 28, DeSantis signed the “Parental Rights in Education” bill, also known as the “Don’t Say Gay” bill, into law. This anti-LGBTQ legislation bans discussion of sexuality or gender identity in kindergarten through third grade, though its vague wording could be used to prevent such discussions — however broadly defined — at any grade level.

Throughout March, right-wing figures and media responded to criticism of the extreme bill with ramped-up attacks accusing LGBTQ people of “grooming” children, the same messaging initially tweeted by Pushaw on March 4. Simultaneously, right-wing media and figures also used anti-LGBTQ content from Libs of TikTok as ammunition for their arguments.

Libs of TikTok is an anonymous anti-LGBTQ Twitter account that started on TikTok but moved to Twitter in November 2020, where it singles out individual TikTok users, including teachers, for ridicule and harassment in tweets that often go viral. On April 13, Twitter briefly suspended the account for violating its policy against hateful conduct. The account has since been restored — even though Libs of TikTok has repeatedly misgendered public figures and content creators.

Pushaw has been interacting with Libs of TikTok and promoting it since at least July 2021. The account pushed the same anti-LGBTQ messaging Pushaw has used, and Pushaw credited Libs of TikTok in a March 24 tweet with opening her eyes to educators teaching “about sex, sexuality, and LGBT issues.”

Media Matters analyzed tweets from Libs of TikTok and found that in addition to posting videos targeting LGBTQ people, the account specifically used “groomer”-related language in 46 tweets since November 2021. The tweets earned over 220,000 total interactions (replies, retweets, likes, and quote tweets), or an average of nearly 5,000 interactions per tweet, almost double the average for the account’s other tweets. Notably, the majority of the tweets with “groomer”-related language from Libs of TikTok (24 out of 46) were posted before March 4, when Pushaw tweeted similar rhetoric.

Pushaw has directly mentioned Libs of TikTok in 97 tweets, with 70 of them posted before her March 4 anti-LGBTQ tweets. Libs of TikTok similarly mentioned Pushaw in 41 tweets since June 2021, praising her as “one of the greatest accounts” on Twitter. (We coded tweets as “mentions” when a user replied to an account, tagged it in a tweet, or replied to another tweet which had tagged that account.)

Pushaw has also repeatedly praised Libs of TikTok, similarly calling it “one of the best accounts on here.” She has also encouraged other users to look at the account’s “evidence.”

Since Pushaw used “grooming” language in her tweets on March 4, she has tweeted similar language another 22 times, earning over 23,000 total interactions, or an average of over 1,000 interactions per tweet. This average is roughly triple that of her other tweets. Meanwhile, Libs of TikTok has continued to post anti-LGBTQ content, even replying to a Pushaw tweet and praising the “Don’t Say Gay” legislation as “literally genius” for exposing “creeps” and those who “identify themselves as pro-grooming.”

Pushaw has continued to interact with Libs of TikTok since March 4, even recently asking for additional information on content that claims to expose Florida teachers and/or curriculum.

The influence of Libs of TikTok is particularly problematic, as the account is run by an anonymous user who openly espouses anti-LGBTQ rhetoric — such as calling LGBTQ identity “narcissism” based on “delusions” — has called for all openly LGBTQ teachers to be fired, and claimed in a recent interview that she is directly responsible for some “evil” teachers being fired already.

*********************

Kayla Gogarty is an Associate Research Director at Media Matters focusing on disinformation.

********************

The preceding article was previously published by Media Matters for America and is republished by permission.

Research/Study

LGBTQ people in LA County struggle with cost of living & safety

Approximately 665,000 LGBTQ adults live in Los Angeles County, according to new research from the Williams Institute

LOS ANGELES – Approximately 665,000 LGBTQ adults live in Los Angeles County, according to new research from the Williams Institute at UCLA School of Law that looks at the lived experiences and needs of LGBTQ people.

The majority (82%) believe that LA County is a good place for LGBTQ people to live and that elected officials are responsive to their needs. However, being able to afford to live in LA County is their most common worry.

Over one-third (35%) of LGBTQ Angelenos live below 200% of the federal poverty level (FPL), including almost half (47%) of transgender and nonbinary people, and they experience high rates of food insecurity and housing instability.

Nearly one in three (32%) LGBTQ households in Los Angeles County and more than one in five (23%) non-LGBTQ households experienced food insecurity in the prior year. In addition, more than 60% of LGBTQ people live in households that are cost-burdened by housing expenses, spending 30% or more of their household income on housing. A quarter (26%) of LGBTQ people live in households where over 50% of the household’s monthly income is spent on rent or mortgage payments.

“LA County represents a promise of equality and freedom to LGBTQ people who live here and throughout the country,” said lead author Brad Sears, the Founding Executive Director at the Williams Institute. “But that promise is being undermined by the County’s rapidly escalating cost of living.”

This report used representative data collected from 1,006 LGBTQ adults in Los Angeles County who completed the 2023 Los Angeles County Health Survey (LACHS) conducted by the Los Angeles County Public Health Department. The data also included responses from 504 LGBTQ individuals who participated in the Lived Experiences in Los Angeles County (LELAC) Survey, a LACHS call-back survey developed by the Williams Institute.

More than half (51%) of LGBTQ adults said they have been verbally harassed, with 39% experiencing this in the past five years. As a result, one in five LGBTQ people have avoided public places such as businesses, parks, and public transportation in the last year. About 40% of LGBTQ people do not believe that law enforcement agencies in Los Angeles County treat LGBTQ people fairly.

A companion study published today surveyed 322 trans and nonbinary individuals in Los Angeles County. Results showed that the cost of living in LA County was the most significant concern for trans and nonbinary respondents, with 59% indicating that it is a serious problem. More than one-quarter (28%) of the participants were unemployed, compared to 5% of LA County overall.

The survey also revealed significant disparities in health and health care access, especially for trans and nonbinary adults who were women or transfeminine, immigrants, and those living at or near the FPL.

“Understanding the life experiences of trans and nonbinary people is important so that we can begin to improve the quality of our lives in LA County,” said study co-author Bamby Salcedo, President and CEO of the TransLatin@ Coalition. “Trans and nonbinary people are best suited to envision ways to support and uplift the community, along with trans-led organizations that have already been doing this work. LA County must commit to greater support of the organizations serving our community.”

Survey respondents were twice as likely as the general population of LA County to report having fair or poor health (27%), being uninsured (14%), and going without health care (46%).

“Despite a supportive policy environment in Los Angeles County, experiences of stigma and discrimination still exist and can hinder access to necessary resources for trans and nonbinary residents,” said lead author Jody Herman, Senior Scholar of Public Policy at the Williams Institute. “It is crucial for local officials and service providers to enact policies, provide education and training, and establish accountability to ensure respectful and positive interaction with the trans and nonbinary community.”

A third report focuses on LGBTQ people’s assessment of LA County programs and services and recommendations for local elected officials.

“These findings from the Williams Institute provide invaluable data that will guide our Board, County departments, and the inaugural LGBTQ+ Commission in shaping policies and programs to truly deliver for our diverse LGBTQ+ communities,” said LA County Board Chair Lindsey P. Horvath, who initiated the motion to present the findings to the Board.

“It’s especially critical that we support our trans, gender-nonconforming, and nonbinary communities, ensuring they feel safe and supported, and that they are able to afford to live in Los Angeles County. These insights will guide our essential and transformative work.”

“Many LGBTQ people provided recommendations for elected officials to improve quality of life in Los Angeles County,” said principal investigator Kerith J. Conron, Research Director at the Williams Institute. “LGBTQ people are asking for visible allyship, increased representation of LGBTQ individuals in elected positions and civil service, and housing and financial support.”

“These recent findings serve as a sobering reminder of the persistent barriers faced by the LGBT community in Los Angeles County,” said Barbara Ferrer, PhD, MPH, MEd, Director of Public Health. “These reports underscore the profound impact of the disparities on the health and wellbeing of a community that include our family members, colleagues, and friends. It is imperative that we not only acknowledge these inequities but actively engage in eliminating them. Through collaborative efforts with community leaders, policymakers, and the public, Public Health is committed to upholding principles of justice and equity by ensuring that every member of our community has the resources they need to thrive.”

Research/Study

New Polling: 65% of Black Americans support Black LGBTQ rights

73% of Gen Z respondents (between the ages of 12 and 27) “agree that the Black community should do more to support Black LGBTQ+ people”

WASHINGTON – The National Black Justice Coalition, a D.C.-based LGBTQ advocacy organization, announced on June 19 that it commissioned what it believes to be a first-of-its-kind national survey of Black people in the United States in which 65 percent said they consider themselves “supporters of Black LGBTQ+ people and rights,” with 57 percent of the supporters saying they were “churchgoers.”

In a press release describing the findings of the survey, NBJC said it commissioned the research firm HIT Strategies to conduct the survey with support from five other national LGBTQ organizations – the Human Rights Campaign, the National LGBTQ Task Force, the National Center for Lesbian Rights, Family Equality, and GLSEN.

“One of the first surveys of its kind, explicitly sampling Black people (1,300 participants) on Black LGBTQ+ people and issues – including an oversampling of Black LGBTQ+ participants to provide a more representative view of this subgroup – it investigates the sentiments, stories, perceptions, and priorities around Black values and progressive policies, to better understand how they impact Black views on Black LGBTQ+ people,” the press release says.

It says the survey found, among other things, that 73 percent of Gen Z respondents, who in 2024 are between the ages of 12 and 27, “agree that the Black community should do more to support Black LGBTQ+ people.”

According to the press release, it also found that 40 percent of Black people in the survey reported having a family member who identifies as LGBTQ+ and 80 percent reported having “some proximity to gay, lesbian, bisexual, or queer people, but only 42 percent have some proximity to transgender or gender-expansive people.”

The survey includes these additional findings:

• 86% of Black people nationally report having a feeling of shared fate and connectivity with other Black people in the U.S., but this view doesn’t fully extend to the Black LGBTQ+ community. Around half — 51% — of Black people surveyed feel a shared fate with Black LGBTQ+ people.

• 34% reported the belief that Black LGBTQ+ people “lead with their sexual orientation or gender identity.” Those participants were “significantly less likely to support the Black LGBTQ+ community and most likely to report not feeling a shared fate with Black LGBTQ+ people.”

• 92% of Black people in the survey reported “concern about youth suicide after being shown statistics about the heightened rate among Black LGBTQ+ youth.” Those expressing this concern included 83% of self-reported opponents of LGBTQ+ rights.

• “Black people’s support for LGBTQ+ rights can be sorted into three major groups: 29% Active Accomplices, 25% Passive Allies (high potential to be moved), 35% Opponents. Among Opponents, ‘competing priorities’ and ‘religious beliefs’ are the two most significant barriers to supporting Black LGBTQ+ people and issues.”

• 10% of the survey participants identified as LGBTQ. Among those who identified as LGBTQ, 38% identified as bisexual, 33% identified as lesbian or gay, 28% identified as non-binary or gender non-conforming, and 6% identified as transgender.

• Also, among those who identified as LGBTQ, 89% think the Black community should do more to support Black LGBTQ+ people, 69% think Black LGBTQ+ people have fewer rights and freedoms than other Black people, 35% think non-Black LGBTQ+ people have fewer rights and freedoms than other Black people, 54% “feel their vote has a lot of power,” 51% live in urban areas, and 75% rarely or never attend church.

Additional information about the survey from NBJC can be accessed here.

Research/Study

63% of LGBTQ+ people have faced employment discrimination

The report’s findings also show 70% of LGBTQ+ people feel lonely, misunderstood, marginalized, or excluded at work

WASHINGTON – A newly released report on the findings of a survey of 2,000 people in the U.S. who identify as LGBTQ says 63 percent of respondents have faced workplace discrimination in their career, 45 percent reported being “passed over” for a promotion due to their LGBTQ status, and 30 percent avoid “coming out” at work due to fear of discrimination.

The report, called “Unequal Opportunities: LGBTQ+ Discrimination In The Workplace,” was conducted by EduBirdie, a company that provides a professional essay-writing service for students.

“The research shows basic acceptance remains elusive,” a statement released by the company says. “Thirty percent of LGBTQ+ people are concerned they will face discrimination if they come out at work, while 1 in 4 fear for their safety,” the statement says. “Alarmingly, 2 in 5 have had their orientation or identity disclosed without consent.”

Avery Morgan, an EduBirdie official, says in the statement, “Despite progress in LGBTQ+ human rights, society stigma persists. Our findings show 70% of LGBTQ+ people feel lonely, misunderstood, marginalized, or excluded at work, and 59% believe their sexual orientation or gender identity has hindered their careers.”

According to Morgan, “One of the biggest challenges businesses should be aware of is avoiding tokenism and appearing inauthentic in their actions. Employers must be genuine with their decisions to bring a more diverse workforce into the organization.”

The report includes these additional findings:

• 44% of LGBTQ people responding to the survey said they have quit a job due to lack of acceptance.

• 15% reported facing discrimination “going unaddressed” by their employer.

• 21% “choose not to report incidents that occur at work.”

• 44% of LGBTQ+ workers feel their company is bad at raising awareness about their struggles.

• Half of LGBTQ+ people change their appearance, voice, or mannerisms to fit in at work.

• 56% of LGBTQ+ people would be more comfortable coming out at work if they had a more senior role.

At least 32 states and the District of Columbia have passed laws banning employment discrimination based on sexual orientation and gender identity, according to the Human Rights Campaign. The EduBirdie report does not show which states participants of the survey are from. EduBirdie spokesperson Anna Maglysh told the Washington Blade the survey was conducted anonymously to protect the privacy of participants.

The full report can be accessed here.

Research/Study

2024 GLAAD Social Media Safety Index: Social media platforms fail

Despite moderate score improvements since 2023 on LGBTQ safety, privacy, and expression, all platforms insufficiently protect LGBTQ users

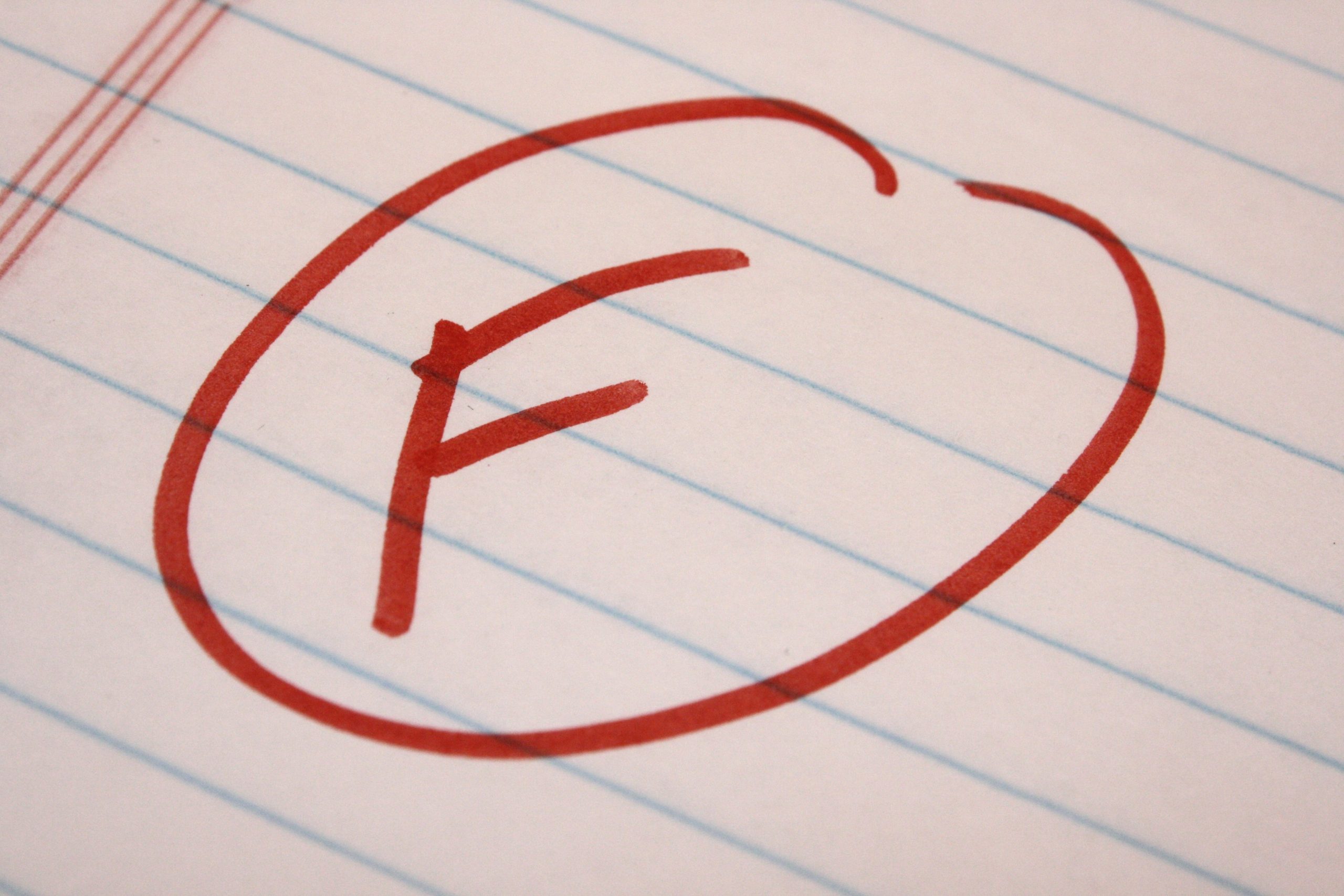

NEW YORK – GLAAD released its fourth annual Social Media Safety Index (SMSI) on Tuesday, giving virtually every major social media company a failing grade as it surveyed LGBTQ safety, privacy, and expression online.

According to GLAAD, the world’s largest LGBTQ+ media advocacy organization, YouTube, X/Twitter, and Meta’s Facebook, Instagram, and Threads received failing F grades on the SMSI Platform Scorecard. For all of them except Threads, which was rated for the first time this year, it was the third consecutive year of failing grades.

The only exception was TikTok, owned by the Chinese company ByteDance, which earned a D+.

Some platforms have shown improvements in their scores since last year. Others have fallen, and overall, the scores remain abysmal, with all platforms other than TikTok receiving F grades.

● TikTok: D+ — 67% (+10 points from 2023)

● Facebook: F — 58% (-3 points from 2023)

● Instagram: F — 58% (-5 points from 2023)

● YouTube: F — 58% (+4 points from 2023)

● Threads: F — 51% (new 2024 rating)

● Twitter: F — 41% (+8 points from 2023)

This year’s report also illuminates the epidemic of anti-LGBTQ hate, harassment, and disinformation across major social media platforms, and especially makes note of high-follower hate accounts and right-wing figures who continue to manufacture and circulate most of this activity.

“In addition to these egregious levels of inadequately moderated anti-LGBTQ hate and disinformation, we also see a corollary problem of over-moderation of legitimate LGBTQ expression, including wrongful takedowns of LGBTQ accounts and creators, shadowbanning, and similar suppression of LGBTQ content. Meta’s recent policy change limiting algorithmic eligibility of so-called ‘political content,’ which the company partly defines as: ‘social topics that affect a group of people and/or society at large’ is especially concerning,” GLAAD’s Senior Director of Social Media Safety Jenni Olson said in the press release announcing the report’s findings.

Specific LGBTQ safety, privacy, and expression issues identified include:

● Inadequate content moderation and problems with policy development and enforcement (including issues with both failure to mitigate anti-LGBTQ content and over-moderation/suppression of LGBTQ users);

● Harmful algorithms and lack of algorithmic transparency; inadequate transparency and user controls around data privacy;

● An overall lack of transparency and accountability across the industry, among many other issues — all of which disproportionately impact LGBTQ users and other marginalized communities who are uniquely vulnerable to hate, harassment, and discrimination.

Key Conclusions:

● Anti-LGBTQ rhetoric and disinformation on social media translates to real-world offline harms.

● Platforms are largely failing to successfully mitigate dangerous anti-LGBTQ hate and disinformation and frequently do not adequately enforce their own policies regarding such content.

● Platforms also disproportionately suppress LGBTQ content, including via removal, demonetization, and forms of shadowbanning.

● There is a lack of effective, meaningful transparency reporting from social media companies with regard to content moderation, algorithms, data protection, and data privacy practices.

Core Recommendations:

● Strengthen and enforce existing policies that protect LGBTQ people and others from hate, harassment, and misinformation/disinformation, and also from suppression of legitimate LGBTQ expression.

● Improve moderation, including by training moderators on the needs of LGBTQ users and moderating across all languages, cultural contexts, and regions. This also means not being overly reliant on AI.

● Be transparent with regard to content moderation, community guidelines, terms of service policy implementation, algorithm designs, and enforcement reports. Such transparency should be facilitated via working with independent researchers.

● Stop violating privacy/respect data privacy. To protect LGBTQ users from surveillance and discrimination, platforms should reduce the amount of data they collect, infer, and retain. They should cease the practice of targeted surveillance advertising, including the use of algorithmic content recommendation. In addition, they should implement end-to-end encryption by default on all private messaging to protect LGBTQ people from persecution, stalking, and violence.

● Promote civil discourse and proactively message expectations for user behavior, including respecting platform hate and harassment policies.

Read the report here: (Link)

Research/Study

The Daily Wire: New vitamins will boost sperm & fight “wokeness”

Marketing for The Daily Wire’s venture tries to cash in on fear of trans people & drag queens by promoting an alternative to “woke” companies

By Mia Gingerich | WASHINGTON – The Daily Wire announced the launch of a new “men’s lifestyle” company named Responsible Man on May 1, promoting its only current product — a men’s dietary supplement that it says is “designed to help … sharpen brain cognition” and that it suggests will help address what the outlet calls the “increasing health risk” of declining “sperm concentration.”

On April 30, The Daily Wire’s parent company Bentkey Ventures registered the assumed name “Daily Wire Ventures.” The next day, on May 1, it debuted Responsible Man, a new company for men’s health products.

The Daily Wire is promoting Responsible Man as an alternative to “woke” companies, fearmongering about some of the outlet’s frequent targets, namely gender-affirming care and drag queens, and asking its readers, “Do you want to buy your men’s health products from a company that partners with drag queens and supports radical organizations that push gender procedures on children?” Responsible Man’s website uses similar language, promising its customers that “together, we can reclaim masculinity” and claiming that “Emerson’s Vitamins are a simple step towards improving yourself, creating order, and building the future.”

Ad from Responsible Man’s website:

The Daily Wire’s promotion suggests Responsible Man’s products can help address various health issues, including the purported “increasing health risk” of declining “sperm concentration” worldwide, promising to help men stay healthy “for the survival of the human race.”

The company’s only product, a men’s multivitamin, is marketed as being “professionally engineered by medical doctors” to “support your immune system, maintain energy production, sharpen brain cognition, and support the health of your heart and muscles.”

Claims made by The Daily Wire’s new company are not FDA-approved

According to disclaimers on Responsible Man’s website, the claims made to promote the company’s vitamins “have not been evaluated by the Food and Drug Administration.” Multivitamins do not need to go through an evaluation process prior to entering the marketplace, and have generally proved ineffective in reducing the risk of heart disease and mental decline.

In the past, The Daily Wire has targeted certain medications used in gender-affirming care for trans youth because they are prescribed off-label, without FDA approval for that use, even though off-label prescribing is a common practice in pediatric medicine. The Daily Wire’s Matt Walsh has been particularly fervent in wielding this point to target gender-affirming care.

The Daily Wire is promoting the new company by targeting Men’s Health magazine

The Daily Wire’s previous ventures into consumer goods have been framed in opposition to specific companies it deemed too “woke,” such as Harry’s Razors and Hershey’s Chocolate, for refusing to advertise with The Daily Wire and featuring a trans woman in an advertisement, respectively. (Jeremy’s Razors and Jeremy’s Chocolate, The Daily Wire’s answers to Harry’s and Hershey’s going “woke,” have received poor feedback from customers.)

The Daily Wire’s promotion of Responsible Man singles out for criticism Men’s Health, the largest men’s lifestyle magazine in the United States. Claiming that Men’s Health was “afraid of manhood itself,” The Daily Wire has declared itself “here to give you a better option.” The lone source of outrage cited by the outlet is a Men’s Health article from November 2021 on “LGBTQ+ Language and Media Literacy.”

******************************************************************************************

Mia Gingerich is a researcher at Media Matters. She has a bachelor’s degree in politics and government from Northern Arizona University and has previously worked in rural organizing and local media.

The preceding article was previously published by Media Matters for America and is republished by permission.

Research/Study

Half of LGBTQ+ college faculty considered moving to another state

Half of LGBTQ+ college faculty surveyed have considered moving to another state because of anti-DEI laws, the Williams Institute found

LOS ANGELES – Anti-diversity, equity, and inclusion (DEI) laws have negatively impacted the teaching, research, and health of LGBTQ+ college faculty, according to a new study by the Williams Institute at UCLA School of Law.

As a result of anti-DEI laws, about half of the LGBTQ+ faculty surveyed (48%) have explored moving to another state, and 20% have actively taken steps to do so. More than one-third (36%) have considered leaving academia altogether.

Nine states have passed anti-DEI legislation related to higher education, and many others are considering similar legislation.

Using data gathered from 84 LGBTQ+ faculty, most of whom work at public universities, this study examined how the anti-DEI and anti-LGBTQ+ climate has affected their teaching, lives outside the classroom, emotional and physical health, coping strategies, and desire to move.

Many faculty reported that anti-DEI laws have negatively impacted what they teach, how they interact with students, their research on LGBTQ+-related issues, and how out they are on campus and in their communities. At least one in ten faculty surveyed have faced requests for their DEI-related activities from campus administrators (14%), course enrollment declines (12%), and student threats to report them for violating anti-DEI laws (10%).

Nearly three-quarters (74%) of the LGBTQ+ faculty said the current environment has taken a toll on their mental health, and over one-quarter (27%) said it has affected their physical health.

Some LGBTQ+ faculty, particularly those who were tenured, part of a union, or well-respected on campus, have responded to anti-DEI policies by becoming more involved in advocacy and activism on (33%) and off campus (26%). Some made positive changes to their teaching, such as adding readings that provide context for LGBTQ+ content and expanding the amount of discussion during class.

“These findings suggest that anti-DEI laws could lead to significantly fewer out LGBTQ+ faculty, less course coverage of LGBTQ+ topics, and a lack of academic research on LGBTQ+ issues,” said study author Abbie E. Goldberg, Affiliated Scholar at the Williams Institute and Professor of Psychology at Clark University. “This could create a generation of students with less exposure to LGBTQ+ issues and faculty mentorship and support.”

ADDITIONAL FINDINGS:

- About 30% of participants said that their college/university communities were conservative or very conservative on LGBTQ+ issues. 6% said that they had experienced harassment or been bothered by supervisors or colleagues due to their LGBTQ+ status, political affiliation, or perceived “wokeness” in the last six months. 20% said that they were scared of this type of harassment.

- Nearly 30% of participants said that their home communities were conservative or very conservative on LGBTQ+ issues. 5% said that they had experienced harassment or been bothered by neighbors due to their LGBTQ+ status, political affiliation, or perceived “wokeness” in the last six months. 37% said that they were scared of this type of harassment.

- Over 60% of survey participants who were parents reported at least one adverse event or change had impacted their children in the past six months, including bullying and harassment (26%), removal of books from classrooms (18%), and curriculum changes (35%).

Read the report here (Link)

Research/Study

Landmark systematic review of trans surgery

Landmark systematic review concluded regret rate for trans surgeries is “remarkably low,” compared to other surgeries & major life decisions

By Erin Reed | WASHINGTON – In recent years, anti-transgender activists have used fear of “regret” as justification to ban gender-affirming care for transgender youth and restrict it for many adults. Now, a new systematic review published in The American Journal of Surgery has concluded that the rate of regret for transgender surgeries is “remarkably low.”

The review encompasses more than 55 individual studies on regret to support its conclusions and will likely be a powerful tool in challenging transgender bans in the coming weeks.

The study, conducted by experts from the University of Wisconsin School of Medicine and Public Health, examines reported regret rates for dozens of surgeries as well as major life decisions and compares them to the regret rates for transgender surgeries.

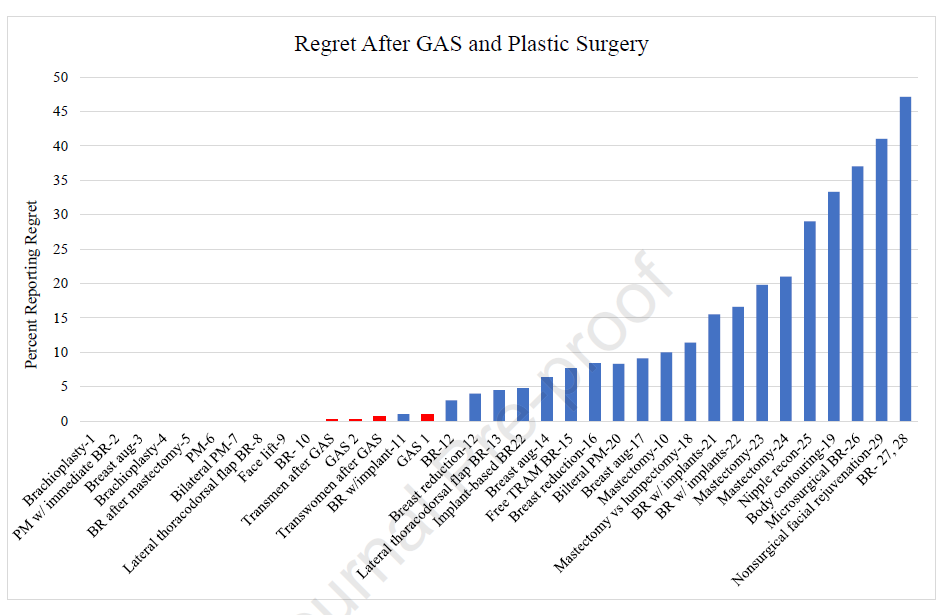

It finds that “there is lower regret after [gender-affirming surgery], which is less than 1%, than after many other decisions, both surgical and otherwise.” It notes that surgeries such as tubal sterilization, assisted prostatectomy, body contouring, facial rejuvenation, and more all have regret rates more than 10 times as high as gender-affirming surgery.

You can see regret rates for many of the surgeries they examined in the review here:

The review also finds that regret rates for gender-affirming surgeries are lower than those for many life decisions. For instance, the review reports that marriage has a regret rate of 31%, having children has a regret rate of 13%, and at least 72% of sexually active students report regret after engaging in sexual activity at least once. All of these are more than ten times higher than the regret rate for gender-affirming surgery.

Regret is commonly weaponized against transgender care. The recently released Cass Review, currently being used in an attempt to ban transgender care in England, mentions “regret” 20 times in the document. Pamela Paul’s piece in The New York Times heavily features stories of regret and objects to reports of low regret rates. Legislators use the myth of high levels of regret to justify harsh crackdowns on transgender care.

Recently, though, anti-trans activists who have pushed the idea that regret may be high appear to be retreating from their claims. In the WPATH Files, a highly editorialized and error-filled document targeting the World Professional Association for Transgender Health, the authors state that the low levels of regret for transgender people obtaining surgery are actually cause for alarm, and that transgender people are “suspiciously” happy.

The idea that transgender people cannot be trusted to report their own happiness and regret has also been echoed by anti-transgender activists and influencers like Matt Walsh and Jesse Singal.

The review sharply criticizes those who use claims of “regret” to justify bans on gender-affirming care: “Unfortunately, some people seek to limit access to gender-affirming services, most vehemently gender-affirming surgery, and use postoperative regret as reason that care should be denied to all patients. This over-reaching approach erases patient autonomy and does not honor the careful consideration and multidisciplinary approach that goes into making the decision to pursue gender-affirming surgery… [other] operations, while associated with higher rates of post-operative regret, are not as restricted and policed like gender-affirming surgery.”

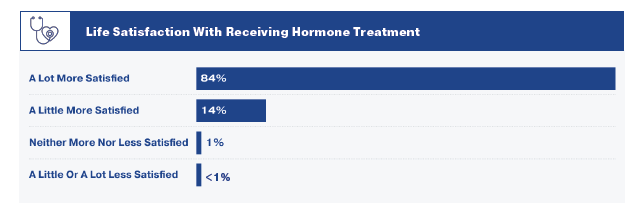

The review is in line with recent data showing very low regret rates among transgender people. The 2022 U.S. Transgender Survey, the world’s largest survey of transgender individuals with more than 90,000 respondents, found that regret among those receiving hormone therapy is exceedingly rare: fewer than 1% report being a little or a lot less satisfied after beginning hormone therapy.

You can view a chart from the 2022 U.S. Transgender Survey showing low rates of regret for hormone therapy here:

There is no evidence that transgender people experience high rates of regret for any transgender care, including transgender surgery. On the contrary, gender-affirming care saves lives.

A Cornell review of more than 51 studies found that gender-affirming care significantly improves the well-being of transgender individuals and also concluded that regret is rare. Low rates of regret for transgender people are not “suspicious.” Rather, they are evidence that the care transgender people seek is important, carefully provided, and helps them live more fulfilled lives.

****************************************************************************

Erin Reed is a transgender woman (she/her pronouns) and researcher who tracks anti-LGBTQ+ legislation around the world and helps people become better advocates for their queer family, friends, colleagues, and community. Reed is also a social media consultant and public speaker.

******************************************************************************************

The preceding article was first published at Erin In The Morning and is republished with permission.

Research/Study

90 percent of trans youth live in states restricting their rights

Slightly more than 75% of trans youth live in 40 states that have passed laws or had pending bills restricting access to gender-affirming care

LOS ANGELES – According to a new report by the Williams Institute at UCLA School of Law, 93% of transgender youth aged 13 to 17 in the U.S.—approximately 280,300 youth—live in states that have proposed or passed laws restricting their access to health care, sports, school bathrooms and facilities, or the use of gender-affirming pronouns.

In some regions, a large percentage of transgender youth live in a state that has already enacted one of these laws. About 85% of transgender youth in the South and 40% of transgender youth in the Midwest live in one of these states.

An estimated 300,100 youth ages 13 to 17 in the U.S. identify as transgender. Nearly half of transgender youth live in the 14 states and Washington, D.C., that have laws that protect access to gender-affirming care and prohibit conversion therapy.

All transgender youth living in the Northeast reside in a state with either a gender-affirming care “shield” law or a conversion therapy ban, while almost all transgender youth in the West (97%) live in a state with one or both protective laws.

“For the second straight year, hundreds of bills impacting transgender youth were introduced in state legislatures,” said lead author Elana Redfield, Federal Policy Director at the Williams Institute. “The diverging legal landscape has created a deep divide in the rights and protections for transgender youth and their families across the country.”

KEY FINDINGS:

Restrictive Legislation

Bans on gender-affirming care

- 237,500 transgender youth, slightly more than three-quarters of transgender youth in the U.S., live in 40 states that have passed laws or had pending bills that restrict access to gender-affirming care.
- 113,900 transgender youth live in 24 states that have enacted gender-affirming care bans.
- 123,600 youth live in 16 additional states that had a gender-affirming care ban pending in the 2024 legislative session.

Bans on sports participation

- 222,500 transgender youth, nearly three-quarters of transgender youth in the U.S., live in 41 states that have passed laws or had pending bills that restrict participation in school sports.
- 120,200 transgender youth live in 27 states where access to sports participation is restricted or state policy encourages restriction.
- 102,300 transgender youth live in 14 additional states that had a sports ban pending in the 2024 legislative session.

School bathroom bans

- 117,000 transgender youth live in 30 states that have passed laws or had pending bills that ban transgender students from using school bathrooms and other facilities that align with their gender identity.
- 38,600 transgender youth live in 13 states that explicitly or implicitly ban bathroom access.
- 78,400 transgender youth live in 17 additional states that had a bathroom ban pending in the 2024 legislative session.

Bans on pronoun use

- 121,100 transgender youth live in 31 states that have passed laws or had pending bills that restrict or prohibit the use of gender-affirming pronouns.
- 49,100 transgender youth live in 14 states that have restricted or banned pronoun use, particularly in schools or state-run facilities.
- 72,000 transgender youth live in 17 additional states that had a restriction or prohibition pending in the 2024 legislative session.

Protective Legislation

Gender-affirming care “shield” laws

- 163,800 transgender youth, over half of transgender youth in the U.S., live in 18 states and D.C. that have passed gender-affirming care “shield” laws or had pending bills that protect access to care.
- 146,700 transgender youth live in 14 states and D.C. that have passed these protections.
- 17,100 transgender youth live in four additional states that had a “shield” law pending in the 2024 legislative session.

Conversion therapy bans

- 204,800 transgender youth live in 31 states and D.C. that ban conversion therapy or had pending bills that prohibit the practice for minors.
- 198,000 transgender youth, about two-thirds of transgender youth in the U.S., live in 27 states and D.C. that ban conversion therapy for minors.
- 6,800 transgender youth live in four additional states that had a ban pending in the 2024 legislative session.

“A growing body of research shows that efforts to support transgender youth are associated with better mental health,” said co-author Kerith Conron, Research Director at the Williams Institute. “Restrictions on medically appropriate care and full participation at school exacerbate the stress experienced by these youth and their families.”

Read the report

Research/Study

Same-sex couples vulnerable to negative effects of climate change

Same-sex couple households disproportionately live in coastal areas, cities & areas with poorer infrastructure and less access to resources

LOS ANGELES – A new report by the Williams Institute at UCLA School of Law finds that same-sex couples are at greater risk of experiencing the adverse effects of climate change compared to different-sex couples.

LGBTQ people in same-sex couple households disproportionately live in coastal areas, in cities, and in areas with poorer infrastructure and less access to resources, making them more vulnerable to climate hazards.

Using U.S. Census data and climate risk assessment data from NASA and the Federal Emergency Management Agency (FEMA), researchers conducted a geographic analysis to assess the climate risk impacting same-sex couples. NASA’s risk assessment focuses on changes to meteorological patterns, infrastructure and built environment, and the presence of at-risk populations. FEMA’s assessment focuses on changes in the occurrence of severe weather events, accounting for at-risk populations, the availability of services, and access to resources.

Results show counties with a higher proportion of same-sex couples are, on average, at increased risk from environmental, infrastructure, and social vulnerabilities due to climate change.

“Given the disparate impact of climate change on LGBTQ populations, climate change policies, including disaster preparedness, response, and recovery plans, must address the specific needs and vulnerabilities facing LGBTQ people,” said study co-author Ari Shaw, Senior Fellow and Director of International Programs at the Williams Institute. “Policies should focus on mitigating discriminatory housing and urban development practices, making shelters safe spaces for LGBT people, and ensuring that relief aid reaches displaced LGBTQ individuals and families.”

“Factors underlying the geographic vulnerability are crucial to understanding why same-sex couples are threatened by climate change and whether the findings in our study apply to the broader LGBTQ population,” said study co-author Lindsay Mahowald, Research Data Analyst at the Williams Institute. “More research is needed to examine how disparities in housing, employment, and health care among LGBT people compound the geographic vulnerabilities to climate change.”

Read the report

Research/Study

Right-wing pastor & podcast host: LGBTQ movement equals Hitler

Podcast host and Ohio county commissioner nominee has pushed baseless conspiracy theories and compared the LGBTQ movement to Hitler

By Payton Armstrong | WASHINGTON – Right-wing pastor and podcast host Drenda Keesee, who is running uncontested in November for a Knox County, Ohio, commissioner seat, has spread unhinged conspiracy theories about climate change, abortion, “satanic hordes” causing people to identify as LGBTQ, and global elites working to bring about a “New World Order.”

Notably, Keesee has claimed that solar farms are part of a plot to “create” food and energy shortages, said LGBTQ people “sentence themself to hell,” compared the LGBTQ movement to Adolf Hitler, and labeled the feminist movement an “occultic agenda” to “get women to fight to kill their children.” Keesee is also a proponent of the “Seven Mountain Mandate,” a theological approach that calls on Christians to impose fundamentalist values on all aspects of American life.

Keesee is running unopposed in November to be a Knox County commissioner after winning her primary on an anti-solar farm platform. Several local media reports have failed to document Keesee’s extreme rhetoric and views, including one from the local NPR affiliate covering her primary win.

Below are several examples of Keesee spreading extreme conspiracy theories about LGBTQ people, a “New World Order,” climate change, and abortion.

Keesee has pushed bigotry and conspiracy theories about LGBTQ people, including that “satanic hordes” and “demonic spirits” cause people to be trans

- Keesee claimed that “satanic hordes” and “demonic spirits” cause children to identify as trans and commit violence. Keesee warned that “children’s spirits” and souls are “at stake,” declaring that “demonic spirits are attacking them and satanic hordes are infiltrating them and even possessing their bodies, which is why we’re seeing more violence among youth, we’re seeing trans violence.” Keesee denied that people can be trans, saying that “you can change their hairstyle, you can do all kinds of surgeries on the outside, but it cannot change what God created a person.” [Drenda On Guard, 10/27/23]

From the October 27, 2023, edition of Drenda On Guard

- Keesee suggested that LGBTQ people are following “Satan’s agenda” and will be in “eternal hell” and “the lake of fire” if they don’t “repent” before Jesus returns. In a Facebook livestream, Keesee called it “abominations” and “Satan’s plan” “when a man lies with a man” and when people “experiment with bodies and change them from what God designed them to create — be created male and female,” seemingly in reference to gay and transgender people. She emphasized that when they “reject God and receive Satan’s agenda … They actually sentence themself to hell.” [Facebook, 9/7/21]

From a September 7, 2021, Facebook Live video

- Keesee called the LGBTQ movement “a cult” and gender-affirming care “hideous, occultic, satanic indoctrination.” During an episode of her podcast, Keesee recounted a story of a child questioning their sexual orientation and gender identity, claiming the child had been coached at school. Keesee claimed that one of the World Economic Forum’s “agendas” is to make children “question the most basic things of humanity,” including “whether they’re even male and female,” in order to “bring us into transhumanism.” [Drenda On Guard, 11/17/23]

- Keesee compared the LGBTQ movement to Adolf Hitler and said the movement is trying to “turn” children “against God and parents.” Keesee claimed that the LGBTQ movement is pushing its agenda “into early ages because just like Hitler, they know if you’re gonna mold a child, you mold them at the youngest age you can.” Keesee added that “it makes [her] want to put on [her] boxing gloves” because children are being “bombarded constantly with messaging that makes them question whether they’re a male or female.” She claimed that schools pressure kids to identify as LGBTQ through “propaganda” that is “introduced in their classroom every day — the rainbow movement, teachers wearing, you know, rainbow, questioning their gender in everyday conversations in school.” [Drenda On Guard, 11/17/23]

From the November 17, 2023, edition of Drenda On Guard

- Keesee claimed that Satan “is really the author” of LGBTQ inclusion and declared that support for LGBTQ people is a sign of “the last days.” Keesee lamented “this whole push of LGBTQ on our daughters and our sons,” and declared that it is “Satan who is really the author of this.” Keesee also said that she saw “a church with steps that were painted rainbow,” noting that, “Jesus said in the last days there would be great heresy, great apostasy … Satan is playing hard for the souls of men and women and especially children.” [Drenda On Guard, 12/15/23]

Keesee has promoted the “New World Order” conspiracy theory about a totalitarian world government, connecting it to LGBTQ inclusion and efforts to curb climate change

- According to the Institute for Strategic Dialogue, “Proponents of the ‘New World Order’ conspiracy believe a cabal of powerful elite figures wielding great political and economic power is conspiring to implement a totalitarian one-world government.” Conspiracy theorists frequently attribute global events such as the COVID-19 pandemic and climate change to the “New World Order.” The conspiracy theory also often incorporates antisemitic narratives.

- Keesee suggested that solar farms are part of a New World Order plot to “create” energy and food shortages. She claimed that solar farms “don’t produce crops” but “destroy the actual dirt and soil of the richest farmland in America,” declaring that “they do that because they’re trying to create a food shortage, so they can create an energy shortage.” Keesee assured her audience that “the globalists, in the end, will not get their way. A new world is coming, but it’s not going to be their great reset, their fourth industrial revolution, their New World Order. It’s going to be the king setting up his kingdom.” [Drenda On Guard, 3/29/24]

From the March 29, 2024, edition of Drenda On Guard

- Keesee claimed that abortion, “LGBTQ agendas,” critical race theory, and “the climate emergency” are part of the plot to “bring us into the New World Order” and “destroy” America. In an episode titled “They Want To Enslave Humanity?!” Keesee said that through critical race theory, abortion, and “LGBTQ agendas,” global elites are trying to “destroy” the nation “like Hitler did with Germany.” She claimed that elites are attempting to “bring us into the New World Order” and that “it’s not a conspiracy theory.” Keesee also claimed that there’s an agenda “to make government God” and “remove parents,” to “weaponiz[e] the children then against our country.” [Drenda On Guard, 11/17/23]

From the November 17, 2023, edition of Drenda On Guard

- Keesee said that the “climate agenda,” support for trans children, and porn addiction are part of an effort to “destroy the Republic of the United States of America in order to bring us into their New World Order, their great reset.” Keesee decried the affirmation of trans children, saying, “Transgendering, transitioning, gender-affirming, whatever — they keep changing the names and make it sound more and more beautiful and wonderful and affirming in love. It’s not love. It’s lust.” She claimed that “the climate agenda,” “the crisis of pornography,” and “transgendering” are “tied to how they bring about the New World Order” to “bring down free nations, and get them to give up their freedom, and their freedom over their children.” [Drenda On Guard, 10/27/23]

Keesee is a proponent of the Christian nationalist “Seven Mountain Mandate”

- The “Seven Mountain Mandate” is a “quasi-biblical blueprint for theocracy” that asserts that Christians must impose fundamentalist values on American society by conquering the “seven mountains” of cultural influence in U.S. life: government, education, media, religion, family, business, and entertainment. Several Republican public officials have come under scrutiny for their connections to the Seven Mountain Mandate, including House Speaker Mike Johnson (R-LA) and Alabama Supreme Court Chief Justice Tom Parker.

- Keesee has made the Seven Mountain Mandate central to her commentary in right-wing media. Right Wing Watch reported that “Keesee’s main focus” is on “promoting Seven Mountains Dominionism,” and highlighted various instances in which Keesee has pushed the Seven Mountain Mandate. In a recent appearance on the Christian nationalist program FlashPoint, for example, Keesee claimed that the “hand of God” was responsible for her victory because Christians must take “our place in the [seven] mountains of influence and leadership” in order to save America. [Right Wing Watch, 3/26/24]

- Keesee is the author of Fight Like Heaven!, which lays out the Seven Mountain Mandate and “shows you precisely how to fight like heaven, kick hell out, and take back these mountains for the Kingdom of God!,” per the book’s description on Amazon. The Amazon description notes that the book “identifies the Seven Mountains of Influence that the Antichrist spirit has invaded.” [Amazon, accessed 4/5/24]

- Keesee has also repeatedly promoted the Seven Mountain Mandate on social media and encouraged followers to “take the mountains to influence others for Christ.” For example, last summer, Keesee wrote: “We have a choice: give control to God or the adversary. The seven mountains—government, economy, health, education, media, and family—can be influenced by either force. Let’s unite as a church, conquer each mountain with grace, and reclaim them for God’s Kingdom!” [Twitter/X, 6/26/22, 6/13/23]

Keesee said the feminist movement is a “demonic, occultic agenda” to “get women to fight to kill their children”

- Keesee said that feminism “shakes its fist in the face of God” and suggested women should come “under the covering of men.” Keesee said that feminism makes women selfish and invoked Satan, saying, “It is a selfishness that says, just like Satan said in Isaiah 9 … ‘I’ll make my throne above God’s throne,’ and it is us enthroning ourselves.” Keesee also expressed agreement with her guest that feminism is a “perversion of God’s word,” and went on to complain that “women don’t know how to be a woman of God that comes under the covering of men.” [Drenda On Guard, 5/12/23]

- Keesee called the feminist movement and abortion a “hideous, demonic, occultic agenda” to “get women to fight to kill their children.” Keesee called feminism “demonic to the core” and asserted that “Satan wants to divide the male, the female, emasculate the men, remove them as the protector — the defender, the strong voice, to protect their kids — and get women to want to attack their own God-given right to bear children.” [Drenda On Guard, 11/17/23]

From the November 17, 2023, edition of Drenda On Guard

******************************************************************************************

The preceding article was previously published by Media Matters for America and is republished with permission.
