Online Culture

TikTok announces change in algorithm to prevent “content holes”

The company explained that “certain kinds of videos can sometimes inadvertently reinforce a negative personal experience for some viewers”

CULVER CITY, Calif. – TikTok, the Chinese-owned video-sharing app that allows users to create and share 15-second videos on any topic, announced this week that the company was altering the algorithm behind its ‘For You’ recommendation system (feed).

TikTok, with its more than 1 billion users, is one of the biggest social media networks globally. The company explained that “certain kinds of videos can sometimes inadvertently reinforce a negative personal experience for some viewers, like if someone who’s recently ended a relationship comes across a breakup video.”

The goal, according to a company spokesperson, is to prevent harmful “content holes,” whereby the system may inadvertently be recommending only very limited types of content that, though not violative of TikTok’s policies, could have a negative effect if that’s the majority of what someone watches, such as content about loneliness.

Dr. Eiji Aramaki, a professor at the Nara Institute of Science and Technology (NAIST) in Ikoma, Nara Prefecture, Japan, whose background is in information science, explained that content holes are created when community-type content, such as that created on a social media platform, exploits the user’s unawareness of information.

Then as the user seeks more similar content, the feed algorithm manipulation creates a “content hole search.”

According to TikTok’s explanation of how its ‘For You’ feed works, recommendations are based on a number of factors, including things like:

User interactions such as the videos you like or share, accounts you follow, comments you post, and content you create.

Video information, which might include details like captions, sounds, and hashtags.

Device and account settings like your language preference, country setting, and device type. These factors are included to make sure the system is optimized for performance, but they receive lower weight in the recommendation system relative to other data points we measure since users don’t actively express these as preferences.
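The weighting TikTok describes can be pictured with a minimal sketch. The three factor groups come from the company’s explanation above; the numeric weights, the `Signals` fields, and the `score` and `rank` helpers are hypothetical and chosen only for illustration, since TikTok has not published its actual values or code.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical weights for illustration only; TikTok has not published real values.
# Device and account settings get the lowest weight because users do not
# actively express them as preferences.
WEIGHTS = {
    "user_interactions": 0.6,
    "video_information": 0.3,
    "device_and_account": 0.1,
}


@dataclass
class Signals:
    """Per-video signal strengths, each assumed to be normalized to the range 0..1."""
    user_interactions: float
    video_information: float
    device_and_account: float


def score(signals: Signals) -> float:
    """Combine the three signal groups into one weighted relevance score."""
    return (
        WEIGHTS["user_interactions"] * signals.user_interactions
        + WEIGHTS["video_information"] * signals.video_information
        + WEIGHTS["device_and_account"] * signals.device_and_account
    )


def rank(candidates: Dict[str, Signals]) -> List[str]:
    """Return video IDs ordered from highest to lowest weighted score."""
    return sorted(candidates, key=lambda vid: score(candidates[vid]), reverse=True)
```

In this sketch, a video that matches a viewer’s likes and follows outranks one that matches only the viewer’s device type or country setting, which mirrors the lower weight the company says those settings receive.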

The company insists that its recommendation system is also designed with safety as a consideration, stating: “In addition to removing content that violates our Community Guidelines, we try not to recommend certain categories of content that may not be appropriate for a general audience.”

Social media companies, especially those with a younger user base, such as TikTok, Snapchat, and Instagram, are under increasing pressure to implement greater safeguards to stave off harmful content.

Last week, Instagram head Adam Mosseri, testifying before the Senate Commerce Committee’s Subcommittee on Consumer Protection, was grilled by senators angered over public revelations of how the photo-sharing platform can harm some young users.

This past September in a report by The Wall Street Journal, based on internal research leaked by a whistleblower at Facebook, it was revealed that for some of the Instagram-devoted teens, the peer pressure generated by the visually focused app led to mental-health and body-image problems, and in some cases, eating disorders and suicidal thoughts.

It was Facebook’s own researchers who alerted the social network giant’s executives to Instagram’s destructive potential.

In July, Media Matters conducted independent research into TikTok’s “For You” page recommendation algorithm that circulated videos promoting hate and violence targeting the LGBTQ community during Pride Month, while the company celebrated the month with its #ForYourPride campaign.

The active spread of explicitly anti-LGBTQ videos isn’t a new problem for TikTok, but it appears that the platform has yet to stop it — even though the company claims to prohibit discriminatory and hateful content targeting sexual orientation and gender identity. TikTok also posted an update in early June celebrating Pride Month and promising to “foster a welcoming environment” and “remove hateful, anti-LGBTQ+ content or accounts that attempt to bully or harass people on our platform.” Given the content being circulated by the algorithm once a user begins interacting with anti-LGBTQ videos, it is clear that TikTok has yet to fulfill these promises.

This week’s announcement by TikTok is seen by some tech industry observers as an effort by the company to change the system from within, avoiding the public outcry and resulting reactions from lawmakers that could lead to more restrictive oversight and regulation.

Social Media Platforms

Queer Mercado taking steps to right their wrongs

As part of that action plan, the Mercado released a survey to the community to gain a better understanding of community needs going forward

Earlier this year, transphobic remarks were posted on social media through the Queer Mercado Instagram account. Co-founder Diana Díaz says she trusted the wrong person to run that account and represent the Queer Mercado, and that the person who made the comment didn’t realize they were commenting through the brand’s account.

Díaz says she believes that she is now making better decisions to benefit Queer Mercado and continue nurturing it, so it can continue growing. She is open to conversations regarding the event and how to make it a safer space for the communities involved.

In an interview with Díaz, she said she was inspired to create this space because as a school counselor for K-12 public school education in Boyle Heights, she was the first person that parents would go to when their child would come out as queer. Her students trusted her as an ally to go to when they felt like they needed support as queer and trans children.

“I worked at all the local school districts as a school counselor and I got to see how the family would react to their child coming out and it was very painful and very personal to me because I love these kids,” said Díaz.

She said that she couldn’t understand why so many of those parents reacted the way they did, knowing that these children were perfectly healthy and only looking for safety and support during a difficult and confusing time.

Although Díaz admits that she is not part of the LGBTQ+ community, she has strong ties to the community as an ally for children who have to not only deal with coming out and coming to terms with their identities, but who also have to deal with the extra burden of coming out within the Latinx community, which often reinforces misogyny, homophobia and transphobia.

Under this particularly hostile administration, it is rare to find an ally like Díaz, who not only stands up for the most vulnerable members of the LGBTQ+ community, but who also tirelessly works to make spaces like Queer Mercado, where the families of those children can feel welcome to explore these identities and this community in a way that is inclusive of all ages. Díaz says she made this space for the Latinx families and parents of LGBTQ+ children in an effort to build stronger relationships.

“I recruited artists and other volunteers to help me start the market,” said Díaz. “I didn’t see a problem with an ally doing it because there is no other free public space for queer families like [Queer Mercado].”

She also notes that this is the only space designated for art and community, specifically catered to the Latinx community in a city with one of the largest demographics of queer and trans Latinx people.

“I know not every grandma is going to be able to go to The Abbey, you know in West Hollywood, or Precinct. Some of us like to go to bed early.”

Díaz first founded Goddess Mercado and says that after she started it, one of her students asked her about creating a space for LGBTQ+ families, which is when she thought of creating the Queer Mercado. She saw the need for this space and realized she could be the person to bring the representation that was needed.

Díaz comes from a family who made their living as vendors at swapmeets and other community spaces, so a space like this for her is deeply personal.

ChiChi LaPinga, a multi-hyphenated activist and community leader in queer and trans spaces, was recently hired as Director of Outreach for the Queer Mercado. They are now in charge of facilitating ideas about how to better the Mercado and make the space as safe as possible for everyone who identifies as a member of the queer and trans communities.

Earlier this year, when the Queer Mercado was caught up in this issue, many community members, vendors and attendees who had avidly supported the event said they no longer wanted to support it if Díaz didn’t step down. Díaz founded the event and continues to believe that she can do more to bridge the gap between hostile families and their queer and trans children by continuing her efforts as founder.

She now says that she needs to take steps to regain community trust by bringing in people who are willing and able to learn, grow, evolve and make the space better than ever.

As part of that action plan, the Mercado released a survey to the community in February to gain a better understanding of community needs going forward.

“I think that this [incident] is a perfect example of why there needs to be queer people in positions of leadership – so that people who aren’t part of the queer community like Diana, are guided through the process,” said ChiChi LaPinga.

ChiChi LaPinga is a Mexican, trans and nonbinary community leader and activist in Los Angeles who has built a reputation over the years working with and representing the queer, trans and Latinx communities.

They say that people like Díaz should be putting people who are queer, who are part of the community, in these positions of influence and power and this incident proves why that is so important and crucial to a space like this.

“It was a very unfortunate situation. It was an error made by ignorance and something that I personally do not condone, right me as a transgender, non binary person, as a decent basic, you know, as a decent human being,” said ChiChi LaPinga. “I am also not the expert on all things, and I rely on my community to educate me on those things, and that is what all allies should do.”

Starting in March, and going forward, ChiChi LaPinga said they have pushed for there to be more panel discussions incorporated into the events where they can discuss issues that affect the community from different perspectives.

“One of the changes that I’ve always wanted to see at the Queer Mercado was to have panel discussions on stage, which is something that I introduced last month and am continuing this month,” said ChiChi LaPinga.

In our candid conversation, ChiChi LaPinga opened up about their own identity and struggles with embracing their identities within a culture that is misogynistic, homophobic and transphobic. They say they understand the community response and push-back for change in leadership, because Queer Mercado should be run by people who are inclusive and accepting of all identities within the LGBTQ+ umbrella.

However, Díaz says she founded the mercado, which is why she hopes to continue leading it, but in a new way that incorporates new voices into conversations about how to move forward.

She saw a need for a space like this and made it happen for her students and their families. She says she hopes that the conversations can continue to help her make better decisions going forward.

Ultimately, ChiChi LaPinga advises community members to make the decision to return to Queer Mercado on their own and only if they feel ready to do so.

“If you do not feel safe in certain spaces, make the decision that is best for you, because I would do the same,” said ChiChi LaPinga.

Online Culture

PregnantTogether unites LGBTQ+ parents under one domain

The Trump administration will not stop LGBTQ+ couples from starting families

For many years, members of the LGBTQ+ community have been feeling increasingly “isolated” from the rest of the world due to Republicans pushing anti-LGBTQ+ rhetoric.

Now more than ever, with the return of President Donald Trump to office, the LGBTQ+ community has raised awareness about resources available to help individuals feel safe and start a family. Services like PregnantTogether, a virtual LGBTQ+ community, are making a difference for couples who want a family but don’t have many resources.

Marea Goodman, the founder and a licensed midwife, said this lack of resources inspired them to create PregnantTogether.

“There really hasn’t been a space for queer folks growing our families. And something that I hear over and over from clients and community members and that I experienced myself when I was in the process of trying to get pregnant and being pregnant is really just the sense of isolation that many of us experience going through this process,” Goodman said.

PregnantTogether offers “tons of recorded, self-paced content and courses about every stage of preconception, pregnancy, birth and postpartum.” It also offers discounts on fertility tests, sperm bank donor catalogs and prenatal vitamins, among other things. People can also pay for the services with either a Health Savings Account (HSA) or a Flexible Spending Account (FSA).

Goodman said in an introductory video that going down a rabbit hole on Google about fertility questions is one of the reasons PregnantTogether exists. Goodman argues that “five to ten-minute” once-a-month prenatal visits with a doctor or midwife aren’t enough to truly help queer people through this process.

That sentiment of not having adequate support is echoed by Jenai Mars, a member of the PregnantTogether community, who says the journey to parenthood can feel isolating, with few role models or community spaces to turn to for support.

“I am one of the first [in] my family and friends, let alone queer community, to go through the process of building a family. My spouse and I felt somewhat isolated and overwhelmed as we began the [trying to conceive] process and wanted to connect with other queer folks going through similar experiences,” she told the Los Angeles Blade.

According to Mars, the community has proven to be a lifeline during the rise of political discourse throughout the United States.

“PregnantTogether has been such an important source of community, comfort, education and inspiration during my pregnancy and through these tough political times,” she said of PregnantTogether. “I’m so grateful for the deep connections and friendship this community has helped foster both in person in NYC and across the country and world.”

Despite the current political climate, LGBTQ+ individuals aren’t putting off their family planning in 2025. In fact, they are tapping into the resources at PregnantTogether.

“As a queer, genderqueer person hoping to start building my family this year, PregnantTogether has meant so much to me,” said Vera Leone, a member of the community. “I have gotten ideas and inspiration for the next steps on my path, and resources for supporting the questions I’m working through around sourcing donor genetic material and processing the grief that inevitably accompanies life, loving, conception and beyond.”

Creating a family is essential for Leone, who moved to the Midwest after having moved around a lot and who describes themself “as an elder millennial coming to fully explore my queerness only later in life.” “I am still in the process of growing into a strong queer community and networks of care rooted in my local city,” they added.

With growing political hostility and a shift away from traditional social media spaces, building community has become more essential than ever for the LGBTQ+ community seeking support and connection, Goodman noted.

“We’ve always obviously been like a minority group. But I think, especially now being kind of like the target of much of the negative political discourse, coming together in community is so essential for our mental health,” Goodman said. “I’m also hearing that people are not feeling like they want to participate in Instagram and Facebook and like the public social media sphere in the same way.”

“In these times of fear and overwhelm and attacks on the very existence of trans people, I feel really called towards building all the connection, support and collective care and resilience that I can,” Leone said of the PregnantTogether community.

Online Culture

Online safe spaces for queer youth increasingly at risk

“Social media [are] where young people increasingly turn to get information about their community, their history, their bodies & themselves”

By Henry Carnell | MIAMI, Fla. – “They had LGBTQ-inclusive books in every single classroom and school library,” Maxx Fenning says of his high school experience. “They were even working on LGBTQ-specific course codes to get approved by the state,” he said, describing courses on queer studies and LGBTQ Black history.

No, Fenning didn’t grow up in Portland or a Boston suburb. Fenning graduated from a South Florida high school in 2020. Florida’s transformation from mostly affirming to “Don’t Say Gay” has been swift, he says. “It feels like a parallel universe.”

Fenning, who just graduated from the University of Florida, follows the developments closely as the executive director of PRISM FL Inc., a youth-led LGBTQ nonprofit he founded at 17. “I’ve watched so many of the things that I kind of took advantage of be stripped away from all of the students that came after me,” Fenning says. “It’s one thing to be in an environment that’s not supportive of you. It’s another thing to be in an environment that’s supportive of you and then watch it fall apart.”

“It’s just gut-wrenching,” Fenning explained, describing how Florida’s increasingly hostile legislation has transformed the state he has lived in most of his life.

Most recently, Florida passed HB3, “Online Protections for Minors,” which bans youth under 14 from having social media accounts. Youths aged 14 and 15 need parental consent before getting accounts, and any minor must be protected from “harmful content” online.

Unlike the previous legislation, which came predominately from the right and directly targeted issues like gender-affirming healthcare or DEI, HB3 is part of a bipartisan push across the country to regulate social media, specifically for youth. HB3 was co-sponsored by Michele K. Rayner, the openly queer Black member of the Florida Legislature, alongside many of her colleagues across the aisle. Similar national legislation, like the Kids Online Safety Act, has 68 Democratic and Republican sponsors.

Shae Gardner, policy director at LGBT Tech, explains that this legislation disproportionately harms LGBTQ youth, regardless of intentions or sponsors.

Gardner says that while all these bills claim they are for the safety of kids, for LGBTQ youth, “you are putting them at risk if you keep them offline.” She explains that “a majority of LGBTQ youth do not have access to affirming spaces in their homes and their communities. They go online to look for that. A majority say online spaces are affirming.”

Research by the Trevor Project, which reports that more than 80% of LGBTQ youth “feel safe and understood in specific online spaces,” backs this up. Specific online spaces targeted by legislation, like TikTok, are disproportionately spaces where LGBTQ youth of color feel safest.

“For LGBTQ people, social media has provided spaces, which are, at once both public and private, that encourage, and enhance … a great deal of self-expression that is so important for these communities,” confirms Dr. Paromita Pain, professor, Global Media Studies & Cybersecurity at University of Nevada, Reno. She is the editor of the books “Global LGBTQ Activism” and “LGBTQ digital cultures.”

Fenning emphasizes that with bills like “Don’t Say Gay,” in Florida — and other states including North Carolina, Arkansas, Iowa, and Indiana — LGBTQ youth have less access to vital information about their health and history. “Social media [are] where young people increasingly turn to get information about their community, their history, their bodies and themselves.”

At PRISM, Fenning works to get accurate, fact-backed information to Florida youth through these pathways, ranging from information on health and wellbeing to LGBTQ history to current events. The feedback has been overwhelmingly positive. Often youth tell him “I wish I learned this in school,” which is a bittersweet feeling for Fenning since it represents how much LGBTQ youth are missing out on in their education.

Morgan Mayfaire, executive director of TransSOCIAL, a Florida advocacy group, said that these internet bans are an extension of book bans, because when he was a teen, books were his pathway into the LGBTQ community. “For me it was the library and the bookstores that we knew were LGBTQ friendly.” Now 65, Mayfaire understands that “kids today have grown up with the internet. That’s where they get all their information. You start closing this off, and you’re basically boxing them in and closing every single avenue that they have. What do you think is going to happen? Of course, it’s going to have an impact emotionally and mentally.”

Fenning says that social media and the internet were powerful to him as a teen. “I was able to really come into my own and learn about myself also through social media. It was really powerful for me, building a sense of self.” Gardner agrees, sharing that legislation like this, which would have limited “15-year-old me, searching ‘if it was OK to be gay’ online, would have stagnated my journey into finding out who I was.”

Gardner also explains that many of the bills, like HB3, limit content that is “harmful” or “obscene” but do not specifically define what that content is. Those definitions can be used to limit LGBTQ content.

“Existing content moderation tools already over-censor LGBTQ+ content and users,” says Gardner. “They have a hard time distinguishing between sexual content and LGBTQ+ content.” Pain emphasizes that this is no accident: “There are algorithms that have been created to specifically keep these communities out.”

With the threat of fines and litigation from HB3, says Gardner, “moderation tools and the platforms that use them is only going to worsen,” especially since the same legislators may use the same terms to define other queer content like family-friendly drag performances.

In addition to being biased, such moderation has devastating effects on LGBTQ youths’ understanding of their own identity, Fenning explains. “That perception of queer people as being overly sexual or their relationships and love being inherently sexual in a way that other relationships aren’t does harm to our community.”

Gardner acknowledges that online safety has a long way to go, pointing to online harassment, cybercrime, and data privacy, but says these bills are not the correct pathways. She emphasizes “everybody’s data could be better protected, and that should be happening on a federal level. First and foremost, that should be the floor of protection.”

She also emphasizes that content moderation has a long way to go from targeting the LGBTQ community to protecting it. “Trans users are the most harassed of any demographic across the board. That is the conversation I wish we were having, instead of just banning kids from being online in the first place.”

Being queer on the ground in Florida is scary. “The community is very fearful. This [legislation] has a big impact on us,” explains Mayfaire.

“I mean, it sucks. Right?” Fenning chuckles unhappily, “to be a queer person in Florida. In a state that feels like it is just continuously doing everything it can to destroy your life and all facets and then all realms.”

Despite the legislative steamrolling, several court wins and coordinated action by LGBTQ activists help residents see a brighter future. “There’s a weird tinge of hope that that has really been carrying so many queer people and I know myself especially this year as we’re seeing the rescinding of so many of these harmful policies and laws.”

For example, this March, Florida settled a challenge to its “Don’t Say Gay” legislation that significantly lessens its impact. Already, experts warn that HB3 will face legal challenges.

Pain emphasizes that social media is central to LGBTQ activism, especially in Florida. “There have been examples of various movements, where social media has been used extremely effectively, to put across voices to highlight issues that they would not have otherwise had a chance to talk about,” she says, specifically citing counteraction to “Don’t Say Gay.” That is another reason why legislation like this disproportionately harms LGBTQ people and other minority groups: it limits their ability to organize.

Fenning emphasizes that HB3 directly attacks spaces like PRISM, which do not just share information for the LGBTQ community, but provide spaces for them. “Foundationally it provides an opportunity for the community,” he says, but more than anything, it provides a space where “you can learn from your queer ancestors, so to speak, and take charge.” And that is invaluable.

******************************************************************************************

Henry Carnell is a reporter and researcher specializing in climate, science, technology, disinformation, and, sometimes, the LGBTQ community.

He is a Williams College graduate and Mother Jones’ Ben Bagdikian 2023-2024 Editorial Fellow. He is also a fellow at the Washington Blade through The Digital Equity Local Voices Lab. Previously, he worked at MIT Press and 5280 Magazine.

His reporting has appeared in Them, Mother Jones, Inside Climate News, 5280, and LGBTQ Nation.

This story is part of the Digital Equity Local Voices Fellowship lab through News is Out. The lab initiative is made possible with support from Comcast NBCUniversal.

Online Culture

LGBTQ+ blogsite Joe.My.God celebrates two decades of impact

There is little doubt his reach and his impact will continue to be felt by the LGBTQ+ community, its allies, and beyond

NEW YORK – Twenty years have passed by since journalist, editor, and blogger Joe Jervis created what is arguably the oldest LGBTQ+ politics, culture, lifestyle, and entertainment blog, all from his New York City apartment alongside a feline companion.

In his daily blog Monday, Jervis recounted:

Today marks the twentieth blogiversary of this here website thingy. Counting this one, we’re at 151,117 posts over 20 years, the last 15 of which have been without a full day off, although my posting on weekends is usually at a slower rate. As I’ve said on this day every year, whether I am insanely committed or am insane and should be committed – that is entirely your call.

I appreciate all of you for sticking with me all these years, particularly over the last few years when the entire world was turned upside down by the pandemic.

In this post last year I noted that site traffic has indicated that most of you tend to read JMG during office hours and that continues to be the case, although I suspect not as many of you are reading this in your work-from-home sexy underwear compared to the lockdown years.

Looking back on the last year of posts, it’s evident that the blessed relief from the twin horrors of the Trump years and the pandemic have arguably been matched by unprecedented and vicious attacks on LGBTQ rights.

Bans of LGBTQ books, “Don’t Say Gay” laws, the seemingly unstoppable erosion of trans rights, the rise of ardently anti-LGBTQ Christian nationalism, attacks on Pride and drag events by literal Nazis, and the ever-looming threat to same-sex marriage dominated our posts on LGBTQ issues over the last year. We’ve won some of these battles, but we’ve lost a frightening number of them.

[…] On behalf of myself and our tireless tech support guy Jack, who deals with a lot of stupid nonsense at stupid hours, you have our eternal thanks for being part of the rollicking community of “homosexual buccaneers” and straight allies that fight the good fight.

Thank you for enduring my typos, my “Don’t Panic” messages, and for sending in news items. I get too many emails to respond to them all, but all of them are very appreciated. The ride will likely only get rougher from here until November, so hang on. We’ve got the kids and righteousness on our side.

Help yourself to some punch and cookies. Please don’t let the cat out. And onward to year TWENTY-ONE!

The veteran writer is also very much an activist on issues that impact the LGBTQ+ community both at home in the United States and abroad. He has tirelessly campaigned to advance same-sex marriage and military service for the LGBTQ+ community, and has battled far-right attacks on the humanity of LGBTQ+ people. Most recently, while defending the drag community against unfounded lies, smears, and labeling by conservative family values politicos and leaders of various anti-LGBTQ+ hate groups, he has pointed out the utter hypocrisy of those very people by maintaining a running scorecard of religious leaders arrested for criminal sexual actions against children. Jervis points out in post after post that not a single drag performer has been arrested or charged with those types of crimes.

As the attacks on the transgender community, drag community, and even LGBTQ+ allies worsen under the current political environment, Jervis maintains his ‘call-to-arms’ writing:

“On behalf of myself and our tireless tech support guy Jack, who deals with a lot of stupid nonsense at stupid hours, you have our eternal thanks for being part of the rollicking community of ‘homosexual buccaneers’ and straight allies that fight the good fight. The ride will likely only get rougher from here, so hang on. We’ve got the kids and righteousness on our side.”

The politically astute Jervis has certainly gained his share of detractors, but after 20 years and with an aggregate total of roughly 1.3 million visitors a month to what he refers to as “this here website thingy,” there is little doubt his reach and his impact will continue to be felt by the LGBTQ+ community, its allies, and beyond.

Online Culture

LGBTQ+ casual encounters app Grindr switches up

With Roam, you will be able to temporarily place your profile in a new location ahead of travel and form local connections before you arrive.

WEST HOLLYWOOD – The world’s largest LGBTQ+ casual encounters app announced last week that it was introducing a new feature that expands its connective capacities. The West Hollywood-based company noted that users have identified new uses for the app, ranging from long-term relationships and networking to local discovery and travel advice.

Grindr says that its app is the connective tissue for the gay community all over the world, including in many countries where it is illegal to be gay.

In a company blog post published on April 16, Grindr Chief Executive Officer George Arison stated:

For decades, the global LGBTQ+ community has physically connected in cities around the world, creating hubs, or Gayborhoods, for local gay, bi, trans, and queer people to freely express themselves, enjoy a sense of safety and intimacy, and foster community through spaces created specifically for us.

However, not all of us are able to access a physical Gayborhood.

[…] We’re announcing Roam, a new feature that allows you to temporarily place your profile in a new location ahead of a trip. Roam is the first of many future Gayborhood features we are launching, and it unlocks new travel functionality to bring you one step closer to other Grindr users around the globe.

Roam allows you to ‘visit’ a new geography to explore other profiles, be seen by members of the local community, and chat with people in that location. Roam is currently in testing in several markets and will be launching broadly later this year.

Social Media Platforms

Instagram battles financial sextortion scams, blurs DM nudity

When sending or receiving these images, people will be directed to safety tips, developed with guidance from experts, about potential risks

Editor’s note: The following article is provided as a public service for readers regarding actions taken by Instagram, a social media platform, dealing with a subject of general interest and concern. The Los Angeles Blade has not verified the information contained herein.

By Meta Public & Media Relations | MENLO PARK, Calif. – Financial sextortion is a horrific crime. We’ve spent years working closely with experts, including those experienced in fighting these crimes, to understand the tactics scammers use to find and extort victims online, so we can develop effective ways to help stop them.

Today, we’re sharing an overview of our latest work to tackle these crimes. This includes new tools we’re testing to help protect people from sextortion and other forms of intimate image abuse, and to make it as hard as possible for scammers to find potential targets – on Meta’s apps and across the internet. We’re also testing new measures to support young people in recognizing and protecting themselves from sextortion scams.

These updates build on our longstanding work to help protect young people from unwanted or potentially harmful contact. We default teens into stricter message settings so they can’t be messaged by anyone they’re not already connected to, show Safety Notices to teens who are already in contact with potential scam accounts, and offer a dedicated option for people to report DMs that are threatening to share private images. We also supported the National Center for Missing and Exploited Children (NCMEC) in developing Take It Down, a platform that lets young people take back control of their intimate images and helps prevent them being shared online – taking power away from scammers.

Takeaways:

- We’re testing new features to help protect young people from sextortion and intimate image abuse, and to make it more difficult for potential scammers and criminals to find and interact with teens.

- We’re also testing new ways to help people spot potential sextortion scams, encourage them to report and empower them to say no to anything that makes them feel uncomfortable.

- We’ve started sharing more signals about sextortion accounts to other tech companies through Lantern, helping disrupt this criminal activity across the internet.

Introducing Nudity Protection in DMs

While people overwhelmingly use DMs to share what they love with their friends, family or favorite creators, sextortion scammers may also use private messages to share or ask for intimate images. To help address this, we’ll soon start testing our new nudity protection feature in Instagram DMs, which blurs images detected as containing nudity and encourages people to think twice before sending nude images. This feature is designed not only to protect people from seeing unwanted nudity in their DMs, but also to protect them from scammers who may send nude images to trick people into sending their own images in return.

Nudity protection will be turned on by default for teens under 18 globally, and we’ll show a notification to adults encouraging them to turn it on.

When nudity protection is turned on, people sending images containing nudity will see a message reminding them to be cautious when sending sensitive photos, and that they can unsend these photos if they’ve changed their mind.

Anyone who tries to forward a nude image they’ve received will see a message encouraging them to reconsider.

When someone receives an image containing nudity, it will be automatically blurred under a warning screen, meaning the recipient isn’t confronted with a nude image and they can choose whether or not to view it. We’ll also show them a message encouraging them not to feel pressure to respond, with an option to block the sender and report the chat.

When sending or receiving these images, people will be directed to safety tips, developed with guidance from experts, about the potential risks involved. These tips include reminders that people may screenshot or forward images without your knowledge, that your relationship to the person may change in the future, and that you should review profiles carefully in case they’re not who they say they are. They also link to a range of resources, including Meta’s Safety Center, support helplines, StopNCII.org for those over 18, and Take It Down for those under 18.

Nudity protection uses on-device machine learning to analyze whether an image sent in a DM on Instagram contains nudity. Because the images are analyzed on the device itself, nudity protection will work in end-to-end encrypted chats, where Meta won’t have access to these images – unless someone chooses to report them to us.

“Companies have a responsibility to ensure the protection of minors who use their platforms. Meta’s proposed device-side safety measures within its encrypted environment is encouraging. We are hopeful these new measures will increase reporting by minors and curb the circulation of online child exploitation.” — John Shehan, Senior Vice President, National Center for Missing & Exploited Children.

“As an educator, parent, and researcher on adolescent online behavior, I applaud Meta’s new feature that handles the exchange of personal nude content in a thoughtful, nuanced, and appropriate way. It reduces unwanted exposure to potentially traumatic images, gently introduces cognitive dissonance to those who may be open to sharing nudes, and educates people about the potential downsides involved. Each of these should help decrease the incidence of sextortion and related harms, helping to keep young people safe online.” — Dr. Sameer Hinduja, Co-Director of the Cyberbullying Research Center and Faculty Associate at the Berkman Klein Center at Harvard University.

Preventing Potential Scammers from Connecting with Teens

We take severe action when we become aware of people engaging in sextortion: we remove their account, take steps to prevent them from creating new ones and, where appropriate, report them to the NCMEC and law enforcement. Our expert teams also work to investigate and disrupt networks of these criminals, disable their accounts and report them to NCMEC and law enforcement – including several networks in the last year alone.

Now, we’re also developing technology to help identify where accounts may potentially be engaging in sextortion scams, based on a range of signals that could indicate sextortion behavior. While these signals aren’t necessarily evidence that an account has broken our rules, we’re taking precautionary steps to help prevent these accounts from finding and interacting with teen accounts. This builds on the work we already do to prevent other potentially suspicious accounts from finding and interacting with teens.

One way we’re doing this is by making it even harder for potential sextortion accounts to message or interact with people. Now, any message requests potential sextortion accounts try to send will go straight to the recipient’s hidden requests folder, meaning they won’t be notified of the message and never have to see it. For those who are already chatting to potential scam or sextortion accounts, we show Safety Notices encouraging them to report any threats to share their private images, and reminding them that they can say no to anything that makes them feel uncomfortable.

For teens, we’re going even further. We already restrict adults from starting DM chats with teens they’re not connected to, and in January we announced stricter messaging defaults for teens under 16 (under 18 in certain countries), meaning they can only be messaged by people they’re already connected to – no matter how old the sender is. Now, we won’t show the “Message” button on a teen’s profile to potential sextortion accounts, even if they’re already connected. We’re also testing hiding teens from these accounts in people’s follower, following and like lists, and making it harder for them to find teen accounts in Search results.

New Resources for People Who May Have Been Approached by Scammers

We’re testing new pop-up messages for people who may have interacted with an account we’ve removed for sextortion. The message will direct them to our expert-backed resources, including our Stop Sextortion Hub, support helplines, the option to reach out to a friend, StopNCII.org for those over 18, and Take It Down for those under 18.

We’re also adding new child safety helplines from around the world into our in-app reporting flows. This means when teens report relevant issues – such as nudity, threats to share private images or sexual exploitation or solicitation – we’ll direct them to local child safety helplines where available.

Fighting Sextortion Scams Across the Internet

In November, we announced we were founding members of Lantern, a program run by the Tech Coalition that enables technology companies to share signals about accounts and behaviors that violate their child safety policies.

This industry cooperation is critical, because predators don’t limit themselves to just one platform – and the same is true of sextortion scammers. These criminals target victims across the different apps they use, often moving their conversations from one app to another. That’s why we’ve started to share more sextortion-specific signals to Lantern, to build on this important cooperation and try to stop sextortion scams not just on individual platforms, but across the whole internet.

*****************************************************************************************

The preceding article was previously published by Instagram.

Social Media Platforms

Social Media platforms still lagging on critical LGBTQ+ protections

All Social Media platforms should have policy prohibitions against harmful so-called “Conversion Therapy” content

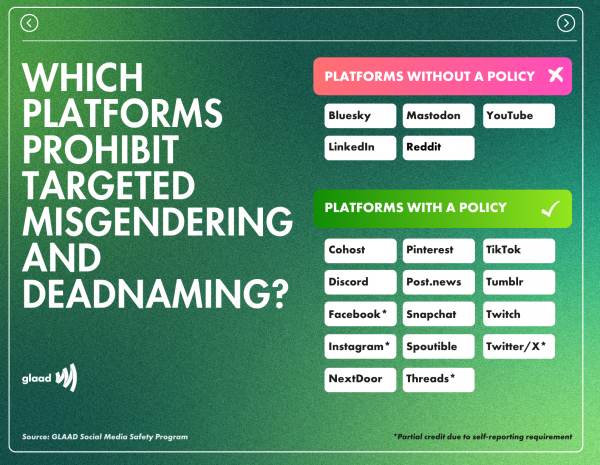

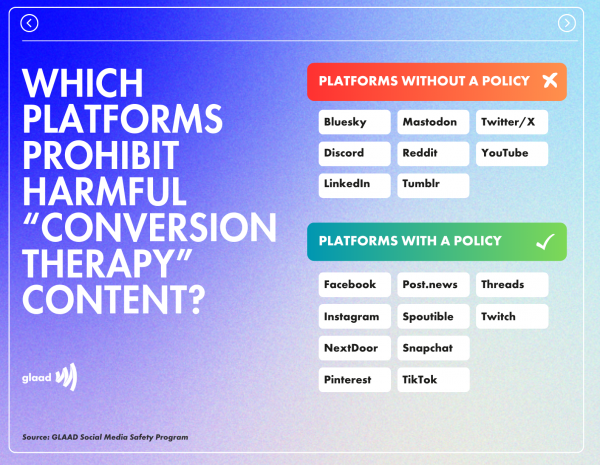

By Leanna Garfield & Jenni Olson | NEW YORK – GLAAD, the world’s largest lesbian, gay, bisexual, transgender, and queer (LGBTQ) media advocacy organization, has released new reports documenting the current state of two important LGBTQ safety policy protections on social media platforms.

The reports show how numerous platforms and apps (including, most recently Snapchat) are increasingly adopting two LGBTQ safety protections that GLAAD’s Social Media Safety Program advocates as best practices for the industry: firstly, expressly stated policies prohibiting targeted misgendering and deadnaming of transgender and nonbinary people (i.e. intentionally using the wrong pronouns or using a former name to express contempt); and secondly, expressly stated policies prohibiting the promotion and advertising of harmful so-called “conversion therapy” (a widely condemned practice attempting to change an LGBTQ person’s sexual orientation or gender identity which has been banned or restricted in dozens of countries and US states).

Major companies that have such LGBTQ policy safeguards include: TikTok, Twitch, Pinterest, NextDoor, and now Snapchat.

Companies lagging behind and failing to provide such protections include: YouTube, BlueSky, LinkedIn, Reddit, and Mastodon. X/Twitter and Meta’s Instagram, Facebook, and Threads have received partial credit due to “self-reporting” requirements.

“Now is the time for all social media platforms and tech companies to step up and prioritize LGBTQ safety,” said GLAAD President and CEO Sarah Kate Ellis. “We urge all social media platforms to adopt, and enforce, these policies and to protect LGBTQ people — and everyone.”

Companies will have another opportunity to be acknowledged for updating their policies later this year. To be released this summer, GLAAD’s annual Social Media Safety Index report will feature an updated version of the charts.

Conversion Therapy

The widely debunked and harmful practice of so-called “conversion therapy” falsely claims to change an LGBTQ person’s sexual orientation, gender identity, or gender expression, and has been condemned by all major medical, psychiatric, and psychological organizations including the American Medical Association and American Psychological Association. Globally, there has been a growing movement to ban “conversion therapy” at the national level. As of February 2024, 14 countries have such bans, including Canada, France, Germany, Malta, Ecuador, Brazil, Taiwan, and New Zealand. In the United States, 22 states and the District of Columbia have restrictions in place.

Expressing concurrence with GLAAD’s Social Media Safety Program guidance, IFTAS (the non-profit supporting the Fediverse moderator community) stated in a February 2024 announcement: “Due to the widespread and insidious nature of expressing anti-transgender sentiments in bad faith, it’s imperative to have specific policy addressing this issue.” Further explaining the rationale behind such policies, the IFTAS announcement continues: “This approach is considered a best practice for two key reasons: it offers clear guidance to users, and it assists moderators in recognizing and understanding the intent behind such statements. It’s important to reiterate that the focus is not about accidentally getting someone’s pronouns wrong. Rather, our concern centers on deliberate and targeted acts of hate and harassment.”

Conveying appreciation to companies but also highlighting the need for policy enforcement, GLAAD’s new reporting notes that while the policies mark significant progress: “These new policy additions do not solve the extremely significant other related issue of policy enforcement (a realm in which many platforms are known to be doing a woefully inadequate job).”

There is broad consensus and building momentum toward protecting LGBTQ people, and especially LGBTQ youth, from this dangerous practice. However, “conversion therapy” disinformation, extremist scare-tactic narratives, and the profit-driven promotion of such services continue to be widespread on social media platforms, via both organic content and advertising. And, as a December 2023 Trevor Project report reveals, “conversion therapy” continues to happen in nearly every US state.

Thankfully, more tech companies and social media platforms are taking leadership to address the spread of content that promotes and advertises “conversion therapy.” In December 2023, the social platform Post added an express prohibition of such content to its policies, and in January 2024 Spoutible did the same. That same month, in response to key stakeholder guidance from GLAAD, IFTAS (the non-profit supporting the Fediverse moderator community) crafted sample policy language and implemented an “IFTAS LGBTQ+ Safety Server Pledge” system for the Fediverse, in which servers can sign on to confirm they have incorporated a policy prohibiting both the promotion of “conversion therapy” content and targeted misgendering and deadnaming. In February, Snapchat also added both prohibitions into its Hateful Content and Harmful False or Deceptive Information community guidelines policies.

GLAAD President and CEO Sarah Kate Ellis acknowledged this recent progress, saying to The Advocate: “Adopting new policies prohibiting so-called ‘conversion therapy’ content puts these companies ahead of so many others. GLAAD urges all social media platforms to adopt, and enforce, this policy and protect their LGBTQ users.”

A January 2024 report from the Global Project Against Hate & Extremism (GPAHE) illuminates how many social media companies and search engines are failing to mitigate harmful content and ads promoting “conversion therapy.” The report outlines the enormous amount of work that needs to be done, and offers many examples of simple solutions that platforms can and should urgently implement. Recommendations from the report are listed below.

In February 2022, GLAAD worked with TikTok to have the platform add an explicit prohibition of content promoting “conversion therapy.” TikTok updated its community guidelines to include the following: “Adding clarity on the types of hateful ideologies prohibited on our platform. This includes … content that supports or promotes conversion therapy programs. Though these ideologies have long been prohibited on TikTok, we’ve heard from creators and civil society organizations that it’s important to be explicit in our Community Guidelines.”

In 2022, GLAAD also urged both YouTube and Twitter (now X) to add an express prohibition of “conversion therapy” into their content and ad guidelines. While X does not currently have such a policy, YouTube, with the assistance of its AI systems, does mitigate “conversion therapy” content by showing an information panel from the Trevor Project that reads: “Conversion therapy, sometimes referred to as ‘reparative therapy,’ is any of several dangerous and discredited practices aimed at changing an individual’s sexual orientation or gender identity.” However, unlike TikTok and Meta, YouTube does not include an explicit prohibition in its Hate Speech Policy.

Meta’s Facebook and Instagram (and by extension Threads which is guided by Instagram’s policies) currently do have such a prohibition (against: “Content explicitly providing or offering to provide products or services that aim to change people’s sexual orientation or gender identity.”). However it is listed separately from the company’s standard three tiers of content moderation consideration as requiring, “additional information and/or context to enforce.” GLAAD has recommended that it be elevated to a higher priority tier. In addition to this content policy, Meta’s Unrealistic Outcomes ad standards policy also prohibits: “Conversion therapy products or services. This includes but is not limited to: Products aimed at offering or facilitating conversion therapy such as books, apps or audiobooks; Services aimed at offering or facilitating conversion therapy such as talk therapy, conversion ministries or clinical therapy; Testimonials of conversion therapy, specifically when posted or boosted by organizations that arrange and provide such services.”

Among other platforms, it is notable that the community guidelines of both Pinterest and NextDoor include a prohibition against content promoting or supporting “conversion therapy and related programs.” Twitch’s community guidelines, meanwhile, expressly state that: “regardless of your intent, you may not: Encourage the use of or generally endorsing sexual orientation conversion therapy.” As mentioned above, Post and Spoutible have also amended their policies, with Spoutible’s new guidelines being the most extensive:

Prohibited Content: Any content that promotes, endorses, or provides resources for ‘conversion therapy.’ Content that claims sexual orientation or gender identity can be changed or ‘cured.’ Advertising or soliciting services for ‘conversion therapy.’ Testimonials supporting or promoting the effectiveness of ‘conversion therapy.’

Spoutible’s policy also thoughtfully outlines these exceptions:

Content that discusses ‘conversion therapy’ in a historical or educational context may be allowed, provided it does not advocate for or glorify the practice. Personal stories shared by survivors of ‘conversion therapy,’ which do not promote the practice, may be permissible.

In addition to GLAAD’s advocacy efforts advising platforms to add prohibitions against content promoting “conversion therapy” to their community guidelines, we also urge these companies to effectively enforce these policies.

To clarify even further, all platforms should add express public-facing language prohibiting the promotion of “conversion therapy” to both their community guidelines and advertising services policies. While some platforms have described off-the-record that “conversion therapy” material is prohibited under the umbrella of other policies — policies prohibiting hateful ideologies, for instance — the prohibition of “conversion therapy” promotion should be explicitly stated publicly in their community guidelines and other policies.

When such content is reported, it’s also important for moderators to make judgments about the content in context, and distinguish between harmful content promoting “conversion therapy” versus content that mentions or discusses “conversion therapy” (i.e. counter-speech). As a 2020 Reuters story details, social media platforms can provide a space for “conversion therapy” survivors to share their experiences and find community.

GLAAD also urges all platforms to review and follow the recommendations below from the Global Project Against Hate & Extremism (GPAHE):

To protect their users, tech companies must:

- Use common sense when evaluating whether content violates rules on conversion therapy and remember that it is dangerous, and sometimes deadly, to allow pro-conversion therapy material to surface. It is quintessential medical disinformation.

- Invest in non-English, non-American cultural and language resources. The disparity in the findings for non-English users is stark.

- Elevate authoritative resources in the language being used for the terms found in the appendix.

- Incorporate “same-sex attraction” and “unwanted same-sex attraction” into their algorithm that moderates conversion therapy content and elevate authoritative content.

- Create or expand the use of authoritative information boxes about conversion therapy, preferably in the language being used.

- All online systems must keep up with the constant rebranding and use of new terms, in all languages, that the conversion therapy industry uses.

- Refrain from defaulting to English content in non-English speaking countries where possible, and if this is the only content available it must be authoritative and translatable.

- All companies must avail themselves of civil society and subject matter experts to keep their systems current.

- Additional recommendations from previous GPAHE research.

Source: Conversion Therapy Online: The Ecosystem in 2023 (Global Project Against Hate & Extremism, Jan 2024)

An earlier version of this overview first appeared in Tech Policy Press and was adapted from the 2023 GLAAD Social Media Safety Index report. The next report is forthcoming in the summer of 2024.

The preceding article was previously published by GLAAD and is republished by permission.

Social Media Platforms

Meta announces new guidelines for teens on Instagram-Facebook

Implementation of the new policies means teens will see their accounts placed on the most restrictive settings on the platforms

MENLO PARK, Calif. – Social media giant Meta announced Tuesday new content policies for teens that restrict access to inappropriate content, including posts about suicide, self-harm and eating disorders, on both of its largest platforms, Instagram and Facebook.

In a post on the company blog, Meta wrote:

Take the example of someone posting about their ongoing struggle with thoughts of self-harm. This is an important story, and can help destigmatize these issues, but it’s a complex topic and isn’t necessarily suitable for all young people. Now, we’ll start to remove this type of content from teens’ experiences on Instagram and Facebook, as well as other types of age-inappropriate content. We already aim not to recommend this type of content to teens in places like Reels and Explore, and with these changes, we’ll no longer show it to teens in Feed and Stories, even if it’s shared by someone they follow.

“We want teens to have safe, age-appropriate experiences on our apps,” Meta said.

Implementation of the new policies means teens will see their accounts placed on the most restrictive settings on the platforms, the caveat being that the teen didn’t lie about their age when they set the accounts up.

Other changes the company announced include:

To help make sure teens are regularly checking their safety and privacy settings on Instagram, and are aware of the more private settings available, we’re sending new notifications encouraging them to update their settings to a more private experience with a single tap. If teens choose to “Turn on recommended settings”, we will automatically change their settings to restrict who can repost their content, tag or mention them, or include their content in Reels Remixes. We’ll also ensure only their followers can message them and help hide offensive comments.

In November, California Attorney General Rob Bonta announced the public release of a largely unredacted copy of the federal complaint filed by a bipartisan coalition of 33 attorneys general against Meta Platforms, Inc. and affiliates (Meta) on October 24, 2023.

Co-led by Attorney General Bonta, the coalition is alleging that Meta designed and deployed harmful features on Instagram and Facebook that addict children and teens to their mental and physical detriment.

Highlights from the newly revealed portions of the complaint include the following:

- Mark Zuckerberg personally vetoed Meta’s proposed policy to ban image filters that simulated the effects of plastic surgery, despite internal pushback and an expert consensus that such filters harm users’ mental health, especially for women and girls. Complaint ¶¶ 333-68.

- Despite public statements that Meta does not prioritize the amount of time users spend on its social media platforms, internal documents show that Meta set explicit goals of increasing “time spent” and meticulously tracked engagement metrics, including among teen users. Complaint ¶¶ 134-150.

- Meta continuously misrepresented that its social media platforms were safe, while internal data revealed that users experienced harms on its platforms at far higher rates. Complaint ¶¶ 458-507.

- Meta knows that its social media platforms are used by millions of children under 13, including, at one point, around 30% of all 10–12-year-olds, and unlawfully collects their personal information. Meta does this despite Mark Zuckerberg testifying before Congress in 2021 that Meta “kicks off” children under 13. Complaint ¶¶ 642-811.

The Associated Press reported that critics charge Meta’s moves don’t go far enough.

“Today’s announcement by Meta is yet another desperate attempt to avoid regulation and an incredible slap in the face to parents who have lost their kids to online harms on Instagram,” said Josh Golin, executive director of the children’s online advocacy group Fairplay. “If the company is capable of hiding pro-suicide and eating disorder content, why have they waited until 2024 to announce these changes?”

Online Culture

Did Marvel Comics just reveal a classic X-Men character is trans?

Until now, the X-Men have never had a trans member. That may have just changed with the publication of X-Men Blue: Origins #1

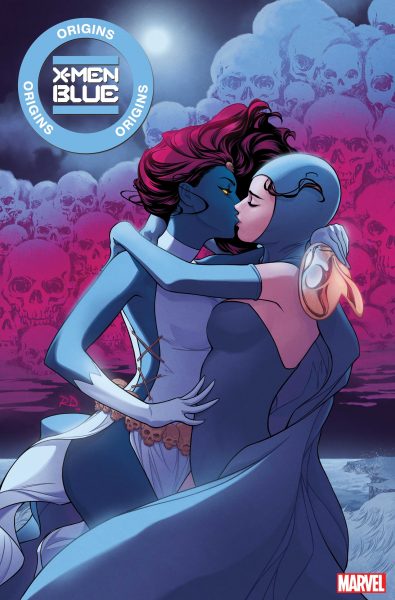

By Rob Salerno | HOLLYWOOD – Marvel Comics’ mutant superheroes the X-Men have always been a metaphor for the struggle against prejudice, boasting a diverse cast that has represented a wide spectrum of races, sexual orientations, and even species. But until now, the X-Men have never had a trans member.

That may have just changed with this week’s publication of X-Men Blue: Origins #1 by Si Spurrier, Wilton Santos, and Marcus To, a story that finally gives the full origin of the mysterious Nightcrawler, who had previously been established as the son of the shape-changing Mystique and a demon named Azazel. All three characters have appeared in Fox’s X-Men films.

Be warned, spoilers follow from here.

In the new issue, Mystique finally confesses the truth of Nightcrawler’s birth. As Mystique now tells it, she didn’t actually give birth to Nightcrawler – her female partner and longtime lover Destiny did. And Nightcrawler’s father? Well, Nightcrawler’s biological father is actually Mystique, who explains that with her shape-changing powers, she has lived as both male and female.

Does that make Mystique trans? Well, the T-word is never actually uttered in the comic, but Mystique’s own words when Nightcrawler protests that she’s female are a firm rejection of the gender binary.

“Don’t be pathetic. I have lived for years as sapiens males. Years more as females. Do you know what I have observed? They’re all as awful as each other. The only true binary division lies not between the genders or sexes or sexualities. It lies between those who are allowed to be who they wish, and those denied that right,” she says.

The revelation isn’t entirely unprecedented. It has long been known in fan circles that Mystique’s creator and longtime X-Men writer Chris Claremont had intended to reveal that Mystique and Destiny were Nightcrawler’s parents, but that Marvel Comics wouldn’t permit queer characters in their books in the 1980s.

Eventually Marvel reversed that policy, and Mystique and Destiny’s relationship is a main story in current X-Men comics, with Marvel even referring to them as “the greatest love story in mutant history” in a recent press release. Marvel has also published comics set in an alternate universe where Mystique is portrayed as male.

While more openly trans characters have appeared in mainstream comics in recent years, these characters have mostly been relegated to guest-starring and supporting characters. For example, Marvel introduced the trainee member Escapade in the X-Men spinoff comic New Mutants last year, while Marvel’s TV shows Jessica Jones and She-Hulk: Attorney at Law gave both heroines trans assistants.

Mystique is now arguably the highest-profile trans character in mainstream superhero comics, as a major character in comics’ biggest franchise, and having been portrayed by Jennifer Lawrence and Rebecca Romijn in seven X-Men films.

Early reaction to the story has been incredibly positive from X-Men’s queer fandom.

“The heart of the story is Mystique embodying the trans ideal of complete and total bodily autonomy, transcending sex and gender to create life with the woman she loves,” wrote @LokiFreyjasbur on Twitter.

Marvel Comics is wholly owned by Disney.

Marvel advancing a story about a gender-nonconforming character flies in the face of a disturbing recent trend in corporate America of being overly cautious about LGBT issues in the wake of far-right backlash after Bud Light partnered with a trans influencer and Target put up its annual Pride display.

******************************************************************************************

Rob Salerno is a writer and journalist based in Los Angeles, California, and Toronto, Canada.

Online Culture

TikTok video of Cody Conner, a Virginia Beach dad, is going viral

“I’m here to tell you that if your love makes somebody not want to be alive, it’s not love. That’s not love”

VIRGINIA BEACH, Va. – Cody Conner, a father of three, gave a passionate speech supporting LGBTQ+ kids during a Virginia Beach City Public Schools board meeting last month. The speech was uploaded as a TikTok video that has since gone viral.

Conner excoriated the board for considering implementation of Republican Virginia Governor Glenn Youngkin’s anti-trans school policies.

“You are never going to find a right way to do the wrong thing and Governor Youngkin’s policies are wrong,” Conner told the board.

“Never in history have the good guys been the segregationist group pushing to legislate identity,” he said. “Never in history have the good guys been closely connected with and supported by hate groups like the Proud Boys. And the good guys don’t put Hitler quotes for inspiration on the front of their newsletters. News flash: they’re the bad guys. They’re the bad guys supporting bad policy. And if you support the same bad policy, guess what? You’re one of the bad guys too.”

“When you look around and see only the wrong people supporting what you’re doing, you’re doing the wrong thing. Now you’ve heard some speakers come up here and say how they love these kids but won’t accept them. I’m here to tell you that if your love makes somebody not want to be alive, it’s not love. That’s not love.”

“Some of you are going to get up here and say ‘it’s the law.’ Well, I remind you that slavery and segregation used to be the law here in Virginia.”

“I just knew I couldn’t stand by and do nothing, just let it happen and hope everything worked out ok, and I also wanted to make sure my kid knew that I would stand up for them,” Conner explains as he begins to tear up. “My big job as a parent is not to tell my children who they are, it’s not to make the decisions for them, it’s not to live their life or decide what their life is going to be, but to show them the best way I know how to walk through this world.”

According to PRIDE journalist Ariel Messman-Rucker, Conner moved his family to Virginia Beach right before Youngkin’s policies passed, and he worries about the future of his 13-year-old trans daughter, who is now in the 8th grade. The family moved from rural Virginia to Virginia Beach so that their kid, who came out as trans a year ago, would be in a supportive school system, but that all changed because of Youngkin.

The 42-year-old father told PRIDE he’s a quiet person and might not have made the choice to speak up if not for his kids.

Virginia’s Department of Education, at the direction of the Governor, has set out “model policies” for public schools that require students to use the bathrooms and play on the sports teams that match their sex at birth.

The policies require written instruction from parents for a student to use a name or gender pronouns that differ from the official record, meaning that teachers can deadname students (refer to them by their prior name) if the paperwork isn’t filled out by the parents. They also require schools to inform parents if a student is questioning their identity, according to 13 News Now.

LGBTQ+ rights activists, including Equality Virginia, have stated these policies will be especially detrimental to LGBTQ+ students who come from conservative, non-affirming homes.

The Virginia Beach School Board in a 9-1 vote approved an updated policy for transgender and nonbinary students.

The new policy will require teachers to use pronouns and names that are on official record with exceptions for nicknames commonly associated with the student’s legal name. If a student requests anything else, teachers will be required to report it to the parents. Students must also use bathrooms and participate in sports teams that correspond to their assigned sex.


-

Viewpoint3 days ago

Viewpoint3 days agoI’m a queer Iranian Jew. Why I stand with Israel during this conflict

-

Health5 days ago

Health5 days agoAPLA opens eighth location in LA County

-

Arts & Entertainment5 days ago

Arts & Entertainment5 days agoKing of Drag competition series hosts premiere party in West Hollywood

-

Congress3 days ago

Congress3 days agoWhite House finds Calif. violated Title IX by allowing trans athletes in school sports

-

Colombia4 days ago

Colombia4 days agoColombia avanza hacia la igualdad para personas trans

-

News4 days ago

News4 days agoDrama unfolds for San Diego Pride ahead of festivities

-

a&e features2 days ago

a&e features2 days agoLatina Turner comes to Bring It To Brunch

-

Books1 day ago

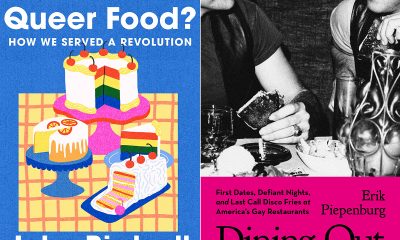

Books1 day agoTwo new books on dining out LGBTQ-style

-

Television2 days ago

Television2 days ago‘White Lotus,’ ‘Severance,’ ‘Andor’ lead Dorian TV Awards noms